This post may sound a bit sci-fi, but it also likely underestimates the trajectories of very real, over-the-horizon breakthroughs in fields like artificial general intelligence, synthetic biology, and quantum computing. Piqued your interest? Let’s chat metaverse.

IQT has been evaluating metaverse technologies, such as spatial computing, for the past several years [1, 2, 3], and we predict the next iteration of the internet will be immersive. The metaverse will be experienced through interfaces that extend virtual reality and digital twins (digital representations of real-world systems) into physical spaces. Consumers will be able to operate seamlessly through low-profile glasses and brain-computer interfaces. Developers will use spatial computing and AI to program metaverse experiences. Recent advances in AI have already made populating the metaverse with hyper-realistic experiences, characters, and worlds a trivial task.

Why does the metaverse matter?

The metaverse could become the ultimate tool of persuasion, or the most interesting, creative place for consumers – though it will likely fall somewhere in between. For the national security community to operate in the metaverse, it needs to be involved in its development. We can start by modeling applications of the latest AI breakthroughs.

Large language models are now advancing chat agents, virtual influencers, and conversational search. In fact, chatbots built on Google’s Language Model for Dialogue Applications (LaMDA) mimic speech based on trillions of words ingested from the internet. In June 2022, an engineer on Google’s Responsible AI team who was testing the chatbot became convinced that LaMDA was enabling consciousness in the chatbots. After the November 2022 release of OpenAI’s ChatGPT, which took the world by storm, and Microsoft’s subsequent announcement that it would integrate ChatGPT into Bing, Google responded by releasing LaMDA as a competing search capability called Bard.

Emergent behaviors in human-machine interfaces and increasingly hyper-realistic interactions with AI are pervading our experiences at a much faster pace than expected. OpenAI’s ChatGPT currently uses the large language model GPT-3.5+ to generate reasonable explanations and content in response to any question or descriptive task. For instance, I asked ChatGPT to write me a few paragraphs on the history of the metaverse in the style of Jack Kerouac, and it remarkably produced the text shown in Figure 1.

ChatGPT is by far the best hack I’ve introduced to my writing workflows (I promise this post was not augmented by ChatGPT in any way). Generative AI techniques will very likely dominate the next five years of consumer interface innovation, as venture capital floods into the space and we realize a vast landscape of applications that, for instance, accelerate creative professions.

In a similar manner to LaMDA, companies like Personal AI and Korbit are training chatbots on the corpus of users’ written (email, tweet, post), image, and video records. Their AI platforms can not only reproduce the content of what a bot trained on your data might return when queried, but also synthesize your conversational style and mannerisms. More recently, Google’s Tacotron 2, which uses WaveNet, needs only a short amount of audio to train a viable artificial voice that mimics the speaking style and tone of an individual. We can now host remarkably intelligent AI agents augmented with GPT-3, trained on minimal amounts of your data, that can synthesize your speech and intonation as well as your verbal and written style and mannerisms.

What concerns surround the metaverse?

It is uncanny how easily these models can resemble your humanity, even while they are trustless and do not have the ability to truly comprehend meaning. In fact, researchers recently developed a technique called silicon sampling, which used GPT-3 to train agents against a corpus of political and religious belief structures. They were able to simulate both individual people and synthetic populations very accurately at scale and then poll the agents across a set of voting metrics. We increasingly complain about how bad election polls are because samples are hard to collect, but how many people do you know who would answer a call from an unknown number or take the time to answer a poll?
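To make the polling idea concrete, here is a minimal Python sketch of what a silicon-sampling loop might look like. It is an illustration only, not the researchers' code: the `Persona` fields, the prompt wording, and `stub_model` are all assumptions, and `stub_model` is a hard-coded placeholder standing in for a real language model call so the example runs offline.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Hypothetical demographic backstory used to condition the model."""
    age: int
    region: str
    affiliation: str


def build_prompt(persona: Persona, question: str) -> str:
    """Turn a persona into a first-person backstory followed by the poll question."""
    return (
        f"I am {persona.age} years old, I live in the {persona.region}, "
        f"and I usually support {persona.affiliation}.\n"
        f"Question: {question}\nAnswer:"
    )


def stub_model(prompt: str) -> str:
    """Placeholder for a language model call (an assumption, not a real API).

    It answers with a fixed rule so the example is deterministic.
    """
    return "Candidate A" if "Party A" in prompt else "Candidate B"


def poll(personas, question, model=stub_model):
    """Ask every synthetic respondent the question and return answer shares."""
    answers = Counter(model(build_prompt(p, question)) for p in personas)
    total = sum(answers.values())
    return {answer: count / total for answer, count in answers.items()}


# A tiny synthetic population; a real study would sample thousands of backstories.
population = [
    Persona(34, "Midwest", "Party A"),
    Persona(61, "South", "Party B"),
    Persona(27, "Northeast", "Party A"),
]
shares = poll(population, "Who will you vote for?")
print(shares)
```

Swapping `stub_model` for a call to an actual language model is where the technique's accuracy would stand or fall, since the shares are only as good as the model's ability to answer in character.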

I predict silicon sampling will soon beat most polling techniques. As it matures, researchers could create virtual citizens to design impactful social science experiments. Companies could target election campaign messages or precision product marketing ads at an unprecedented level of granularity. Nation states will also optimize and target propaganda and disinformation at scale, for example by simulating large-scale information warfare and influence operations before deploying them.

As synthetic personas in the metaverse become hyper-realistic, it may be very difficult for users to know whether they are interacting with a human being or an AI. Companies like InWorld AI and NPCx are developing non-player characters (NPCs) with unique personalities, memories, and experiences, again augmented by large language models like GPT-3. Their AI-based platforms are designed to populate immersive realities including the metaverse, VR/AR, games, and virtual worlds. InWorld users can exert fine-grained control over the behavioral and cognitive features of their NPCs, as well as how they are perceived in areas as diverse as speech, gestures, personality, and event triggers. These companies can integrate their NPC architecture into powerful simulators like Unreal Engine’s MetaHuman Creator. As a result, the NPCs will not only have fine-grained personalities, advanced speech, and behavioral characteristics, but will also be rendered as high-resolution, human-like avatars.

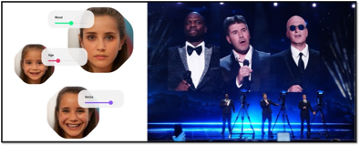

Metaphysic’s Every Anyone allows users to create hyperreal 3D avatars of themselves based on simple smartphone captures. While the terms of use and ownership of these assets are unclear, the company intends to mint them as non-fungible tokens (NFTs), so users can certify “ownership” of their avatar. Nevertheless, by running Metaphysic’s models through volumetric cameras, the company can reproject live, deepfake video avatars of others’ likenesses, expressions, and mannerisms. The company showcased its technology in the “America’s Got Talent” finals, where live singers were reprojected as the show’s judges. Live volumetric reprojection currently requires specialized hardware, a requirement that next-generation smartphones and XR headsets may satisfy.

The ability to own, modify, and reproject one’s likeness as someone else’s in real time offers fascinating disruptive capabilities and opens several novel attack vectors. If one’s identity were stolen or used nefariously, it could be difficult to disprove what the visual records appear to show. Within social media platforms, someone could use an avatar to impersonate an influencer and then scam fans, damage reputations, or spread disinformation.

Not being able to verify who you are communicating with has been a problem since the birth of the internet. The dimensions of this problem expand in the metaverse, where the hyperreal avatars you interact with could be deepfaked humans, intelligent NPCs, or synthetic populations with targeted messages and agendas.

How could the metaverse impact national security and beyond?

Platform providers could implement an active notification system that labels and identifies AI and deepfakes to users. Systems like Microsoft’s DeepSigns implement a similar watermarking system, though mainly for trademark use cases. Without regulation, this would be a voluntary control decided by platforms, and adoption would likely diverge across U.S., European, and authoritarian regimes. With regulation, it would still face numerous countermeasures.

Verifying the virtual identity of users is particularly problematic. The integration of biomarkers from brain-computer interfaces (BCIs) will be critical in securely identifying users. For example, the company Arctop develops a hardware-agnostic stack that fingerprints brainwave (EEG) signals. The fingerprint is being used to authenticate users into headsets, and the stack continuously rechecks the signature to test whether the user is still present. Arctop can also be deployed to other hardware capable of reading EEG, like earbuds.
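As a rough illustration of continuous biometric authentication, the Python sketch below enrolls a template from a few feature vectors and then locks the session after consecutive mismatches. This is not Arctop's method, which is not described here. The feature vectors, the cosine-similarity comparison, the 0.9 threshold, and the two-miss rule are all assumptions chosen to keep the example small.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def enroll(samples):
    """Average several enrollment readings into one template vector."""
    count = len(samples)
    return [sum(values) / count for values in zip(*samples)]


def is_same_user(template, features, threshold=0.9):
    """Hypothetical decision rule: accept if the reading is close to the template."""
    return cosine_similarity(template, features) >= threshold


def monitor_session(template, readings, threshold=0.9, max_misses=2):
    """Recheck the signature on every reading.

    Returns the index of the reading that locks the session, or None if the
    user stays authenticated for the whole stream.
    """
    misses = 0
    for index, features in enumerate(readings):
        if is_same_user(template, features, threshold):
            misses = 0  # a good reading resets the counter
        else:
            misses += 1
            if misses >= max_misses:
                return index
    return None


# Made-up three-dimensional "EEG features" for illustration only.
template = enroll([[1.0, 0.0, 0.2], [0.9, 0.1, 0.2]])
stream = [[1.0, 0.0, 0.2], [0.0, 1.0, 0.0], [0.0, 1.0, 0.1]]
locked_at = monitor_session(template, stream)
print(locked_at)
```

Requiring more than one consecutive miss is a design choice that trades a slightly slower lockout for fewer false alarms from a single noisy reading.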

BCIs are well on their way to being fully integrated into our immersive experiences. Meta’s Quest Pro includes eye and facial expression tracking and will allow developers to track key biometrics like attention, gaze, and pupil dilation. Pison’s electro-muscular sensor/chip will integrate with smart watches so that free-form hand gestures and finger snaps can control experiences. These ubiquitous surveillance devices will be able to track posture and gait to infer emotional information about how the user is reacting in real time. They will further create a biometric digital twin that tracks facial expressions, vocal inflections, eye motions, pupil dilation, vital signs, blood pressure, and heart and respiration rates, producing extensive profiles that can be used to trigger emotional responses.

There is no regulatory regime for these biometric signals, which are on a path to being owned, controlled, and licensed by platform providers. If the metaverse adopts the perverse incentives of the “freemium” models developed by 2D internet social networks, where users are the product and algorithms compete for their attention to serve ads and influence, the metaverse will become the ultimate tool of persuasion.

It is imperative that regulators act to develop sensible guardrails and incentives that prevent metaverse platforms from adopting the predatory business models that are ingrained in social media. Since metaverse business models are still being developed, now is the best time to put those guardrails in place and keep the industry from competing to create the most persuasive advertising. Guardrails could prevent an arms race in extensive biometric profiling for influence and manipulation and instead foster competition towards making the metaverse the most interesting, creative place for consumers.

Generative AI & the metaverse

I would be remiss not to mention the power of generative AI and its application in building metaverse platforms. Recent breakthroughs in generative AI enable efficient text-to-image generation, as exemplified by the Stable Diffusion model. Stable Diffusion starts from a noise vector and gradually modifies it over several steps until a coherent image emerges. As a result, a user can simply enter a text prompt like “two-story, midcentury modern, grey and green house, over a cliff surrounded by the ocean during sunset,” and receive a photo-realistic generated output. This generative AI technique has been extended to text-to-video use cases and can now be used to create short videos.
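For readers who want to see the step-by-step denoising idea in code, here is a toy Python sketch of a reverse diffusion loop. It is not Stable Diffusion itself, which runs a trained, text-conditioned neural network over image latents. Here the noise predictor is an assumption: a closed-form stand-in that is exact only when the "training data" is a single known vector, and the schedule values and function names are illustrative.

```python
import math
import random


def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule: per-step betas, alphas, and cumulative alpha products."""
    betas = [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def toy_noise_predictor(x_t, alpha_bar, target):
    """Stand-in for the trained network (assumption).

    If every training example equals `target`, the noise that was added to
    reach x_t can be recovered in closed form.
    """
    scale = math.sqrt(alpha_bar)
    spread = math.sqrt(1.0 - alpha_bar)
    return [(x - scale * m) / spread for x, m in zip(x_t, target)]


def sample(target, steps=50, seed=0):
    """Start from pure Gaussian noise and denoise it one step at a time."""
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # the initial noise vector
    for t in reversed(range(steps)):
        eps_hat = toy_noise_predictor(x, alpha_bars[t], target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Remove the predicted noise for this step.
        x = [(xi - coef * e) / math.sqrt(alphas[t]) for xi, e in zip(x, eps_hat)]
        if t > 0:
            # Re-inject a little fresh noise on every step except the last.
            sigma = math.sqrt(betas[t])
            x = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
    return x


# Three made-up "pixel" values play the role of the image to be generated.
pixels = sample([0.2, 0.8, 0.5])
print([round(p, 3) for p in pixels])
```

In a real text-to-image system the text prompt steers the noise predictor, so the same loop converges on different images for different prompts.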

Diffusion models are rapidly becoming more spatially sophisticated and hyper-realistic, and as such, they will be able to instantly generate three-dimensional, ad-hoc digital assets, scenes, and interactive experiences. In short, as they evolve, users will be able to intuitively ask these models to populate the digital assets and experiences they want, much like Star Trek’s holodeck.

It is, however, difficult to overestimate the disruptive nature of generative AI, which extends not only into metaverse platforms but across countless industries and will drive one of the most impactful waves of new technology over the next five years. Generative AI technology will impact, enable, and potentially compete with numerous design professions where form meets function. For instance, animators could rapidly design new scenes for a film, and architects could instantly create novel structures. These models can be used to create new digital logos and graphics instantly and intuitively for presentations. In fact, Microsoft plans to replace Clip Art with text-to-image generation in its Office tools.

Why it all matters

We are at the convergence of diffusion models building out virtual worlds and large language models powering the brains of synthetic characters. BCIs can help us initially differentiate humans from machines, but the costs of providing our bio-signatures to unregulated tech are high. In fact, if status-quo, ad-serving, attention-economy business models come to dominate how 3D, biomarker-driven applications are built, the metaverse will become the ultimate vector for immersive disinformation and persuasion.