We imagine what capabilities an Explainable AI (XAI) black box would need in order to emulate Penn & Teller’s style of explanation.

Introduction

Penn & Teller: Fool Us is an American television show. The hosts, Penn Jillette and Teller (P&T), are renowned magicians who have performed together for several decades. On the show, magicians from all over the world are invited to perform a trick for P&T. If neither host can explain how the trick was done to the performer's satisfaction, the performer has successfully fooled P&T. Over the first six seasons (season 7 has just commenced), 298 magicians have attempted to fool P&T and 77 have succeeded, giving P&T a successful explanation rate of 74% (221 of 298).

Beyond amazing magic tricks, this show is about generating conversational explanations in an adversarial setting. By dissecting how P&T explain magic tricks, we can develop intuition about architecting Explainable AI (XAI) systems.

The most important part of each episode is the explanation of the trick. It would be a simple matter for P&T to explain the trick in plain language, but doing so would violate an unwritten rule of the magic community, which largely believes in keeping the mechanics of magic tricks hidden from public view. Instead, they explain the trick in coded magic jargon so as not to give away the mechanics.

P&T's explanations typically take one of four forms.

- Type 1: You did not fool us. Here is how you did the trick (presented in magic jargon).

- Type 2: We are uncertain. This is how we think you did it. If you agree with our reasoning, you did not fool us.

- Type 3: You partially fooled us. There is a part of the trick that we were not able to decipher.

- Type 4: You completely fooled us. We have no idea how the trick was done.

A Type 3 or 4 explanation results in a fooling.
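The four structures can be read as a small decision rule over two quantities: how much of the trick the hosts can account for, and how confident they are in that account. The sketch below assumes both quantities are available as inputs; the names `explanation_type` and `is_fooling` are hypothetical, and reducing a trick to a single "fraction explained" is a deliberate simplification.

```python
from enum import Enum


class ExplanationType(Enum):
    """The four explanation structures, numbered as in the list above."""
    NOT_FOOLED = 1        # Type 1: full explanation, stated with confidence
    UNCERTAIN = 2         # Type 2: full explanation, pending the performer's confirmation
    PARTIALLY_FOOLED = 3  # Type 3: part of the trick remains unexplained
    FOOLED = 4            # Type 4: no part of the trick could be explained


def explanation_type(fraction_explained: float, confident: bool) -> ExplanationType:
    """Map the explained fraction of a trick (0.0 to 1.0) to an explanation type.

    Hypothetical helper: both inputs are illustrative quantities, not scores
    used on the show.
    """
    if not 0.0 <= fraction_explained <= 1.0:
        raise ValueError("fraction_explained must lie in [0, 1]")
    if fraction_explained == 0.0:
        return ExplanationType.FOOLED
    if fraction_explained < 1.0:
        return ExplanationType.PARTIALLY_FOOLED
    return ExplanationType.NOT_FOOLED if confident else ExplanationType.UNCERTAIN


def is_fooling(kind: ExplanationType) -> bool:
    """A Type 3 or Type 4 explanation counts as a fooling."""
    return kind in (ExplanationType.PARTIALLY_FOOLED, ExplanationType.FOOLED)
```

Note that a Type 2 explanation is not a fooling by itself; it becomes one only if the performer rejects the hosts' reasoning, which this sketch leaves outside the rule.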

Four tricks: a glimpse of an ontology of magic

This is the fun part of the article (wherein you are allowed to watch TV for science). Each section below represents an instance of one type of explanation.

Exact Cuts: Jimmy Ichihana (Season 6, Episode 6)

Jimmy Ichihana, returning to the show for a second time with close-up card magic, performs two card routines that involve cutting a deck exactly by color, suit, or number at very high speed. Because of the speed at which he presents the effects, some parts of the trick fooled Penn and other parts fooled Teller, but between them they deciphered the trick completely and gave a Type 1 explanation.

We can make two observations from this performance.

1. Replaying the input: Given the rate at which Ichihana was handling the cards and producing effects, P&T had to replay the act mentally to figure out how the trick was done.

2. Two is better than one: This is a case where having both P&T try to explain the trick was better than having either one of them alone. As a logical extrapolation of this observation, consider the limiting case of P&T being replaced by every magician who ever lived. In that case the probability of a successful fooling becomes vanishingly small.

XAI systems will need both of these capabilities: replaying the entire input sequence, as well as combining multiple models (e.g., mixture of experts or ensemble learning).
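Both observations can be sketched together: replay the full recorded performance step by step and let several explainers look at each step, so that a step counts as explained if any one of them can explain it. The limiting-case argument becomes a one-line formula under a strong assumption: if each of n explainers independently fails to explain a trick with the same probability p, the chance that all of them fail (a successful fooling) is p^n, which shrinks toward zero as n grows. The independence assumption, the step labels, and the toy `penn`/`teller` functions are illustrative only.

```python
from typing import Callable, Sequence

# Hypothetical interface: an explainer inspects one step of the recorded
# performance and returns True if it can explain that step.
Explainer = Callable[[str], bool]


def replay_and_explain(recording: Sequence[str], explainers: Sequence[Explainer]) -> set[int]:
    """Replay every step of the recording; return the indices of explained steps.

    A step is explained if at least one explainer can explain it, so the
    ensemble covers the union of what the individual explainers cover.
    """
    return {i for i, step in enumerate(recording) if any(e(step) for e in explainers)}


def prob_all_fail(p_fail: float, n_explainers: int) -> float:
    """Probability that every explainer fails, assuming independent failures."""
    return p_fail ** n_explainers


# Toy example: each host can explain only some of the steps.
def penn(step: str) -> bool:
    return step in {"cut by color", "cut by number"}


def teller(step: str) -> bool:
    return step == "cut by suit"


recording = ["cut by color", "cut by suit", "cut by number"]
print(len(replay_and_explain(recording, [penn])))          # 2
print(len(replay_and_explain(recording, [penn, teller])))  # 3
print(prob_all_fail(0.5, 10))                              # 0.0009765625
```

Taking the union is the simplest way to combine explainers; a mixture of experts would instead learn which explainer to trust for which kind of step.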

Film to Life: Rokas Bernatonis (Season 3, Episode 6)

We now look at a Type 2 explanation. Rokas Bernatonis performs a trick that combines two well-known tricks, Card to Wallet and Film to Life. P&T correctly identify both, tell Bernatonis in code which elements they think the combined trick contains, and ask whether they are wrong. He confirms that they are correct, so P&T are not fooled.

3. Explanations must quantify uncertainty: In this case, the explanation explicitly stated its own uncertainty. XAI systems will likewise need to quantify the uncertainty in their explanations.
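One common way to attach uncertainty to an explanation is to score several candidate hypotheses, normalize the scores into probabilities, and report both the top hypothesis's probability and the entropy of the whole distribution. The sketch below does this with a softmax. The hypothesis names, the raw scores, and the 0.9 threshold that triggers a Type 2 style "are we right?" are assumptions for illustration; in practice, softmax outputs usually need calibration before they can be read as honest probabilities.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw hypothesis scores into a probability distribution."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {h: math.exp(s - top) for h, s in scores.items()}
    total = sum(exps.values())
    return {h: e / total for h, e in exps.items()}


def entropy_bits(probs: dict[str, float]) -> float:
    """Shannon entropy of the distribution in bits (0 means fully certain)."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def explain_with_uncertainty(
    scores: dict[str, float], threshold: float = 0.9
) -> tuple[str, float, float, bool]:
    """Return (best hypothesis, its probability, entropy, hedge flag).

    The hedge flag is True when the best hypothesis falls below the
    (hypothetical) confidence threshold, i.e. when the explanation should
    be offered tentatively, as in a Type 2 explanation.
    """
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    return best, probs[best], entropy_bits(probs), probs[best] < threshold


best, p, h, hedge = explain_with_uncertainty({"method A": 2.0, "method B": 1.0})
print(best, round(p, 2), round(h, 2), hedge)  # method A 0.73 0.84 True
```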

Composition: Eric Mead (Season 4, Episode 10)

Eric Mead fooled P&T with a close-up coin trick that relied on sleight of hand. At the outset, Mead declares that the trick he is about to perform is a variant of the Cylinder and Coins trick invented by John Ramsay. Two points emerge from the subsequent Type 3 explanation.

4. Historical knowledge and evolution of tricks: Magic has a very long history, and Teller reportedly possesses encyclopedic knowledge of it. A successful explanation requires recognizing that a specific trick is being performed and knowing whether it has any variants or introduces novelty beyond the original trick. During the performance, Mead signals this novelty by claiming, “I’m certain that there are a couple of beats along the way that are puzzling or mystifying to them.” It would be much easier to fool P&T if they lacked this wealth of historical knowledge and a sense of how it has evolved over time. XAI systems need to capture this kind of drift in their historical knowledge (in ML terms, their training data).

5. Mutual common knowledge: Mead and P&T each have some knowledge of the other’s magic repertoire. Mead notes that the trick they are about to see has been performed by P&T “on this very stage”. Similarly, P&T note that “we know you and we know this trick”. This resembles common knowledge in the game-theoretic sense. XAI systems will need a way to capture common knowledge of the domain.
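Point 4, recognizing a known trick and isolating what is new about it, can be sketched as a nearest-match lookup against a knowledge base of tricks and their variants: pick the known trick whose effects best overlap the observed ones (Jaccard similarity here, one choice among many) and report the leftover effects as candidate novelty. The knowledge base, the "beat" labels, and the function names are hypothetical. The last lines illustrate why drift matters: the same performance looks partly novel or fully explained depending on how current the knowledge base is.

```python
# Hypothetical knowledge base: each known trick or variant maps to the set of
# "beats" (observable moments) it is known to produce. Names are placeholders.
KNOWN_TRICKS: dict[str, set[str]] = {
    "original trick": {"beat 1", "beat 2", "beat 3"},
    "published variant": {"beat 1", "beat 2", "beat 3", "beat 4"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two sets of beats, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def recognize(observed: set[str], known: dict[str, set[str]]) -> tuple[str, set[str]]:
    """Return the closest known trick and the observed beats it does not explain."""
    closest = max(known, key=lambda name: jaccard(observed, known[name]))
    return closest, observed - known[closest]


performance = {"beat 1", "beat 2", "beat 3", "beat 4", "beat 5"}
print(recognize(performance, KNOWN_TRICKS))  # ('published variant', {'beat 5'})

# Drift: once a newer variant enters the knowledge base, the same beats are
# no longer reported as novel.
KNOWN_TRICKS["newer variant"] = set(performance)
print(recognize(performance, KNOWN_TRICKS))  # ('newer variant', set())
```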

Nothing to Go On: Harry Keaton (Season 6, Episode 3)

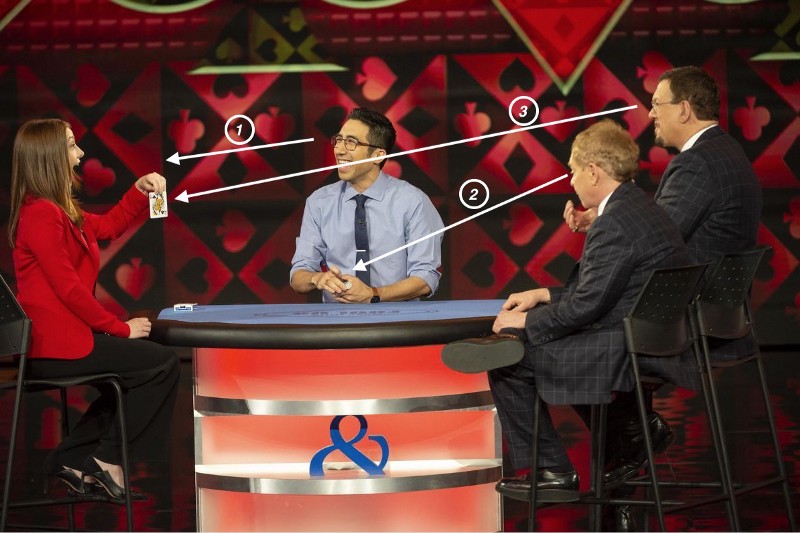

In this trick, Harry Keaton asks the emcee of the show, Alyson Hannigan, to feel objects hidden inside a minimal rectangular box. When she discloses what she thinks she is feeling, Keaton uncovers the box to reveal a completely different object. For instance, she says she feels a sponge, and Keaton reveals a small rock.

P&T had never seen anything like it before — “… we got no way to figure out anything because you invented the damn thing…” Clearly this is a Type 4 explanation.

6. Complete novelty defies explanation in conventional terms: Whether the novelty is real or merely perceived because the trick lies completely outside P&T’s experience, the mechanics of the trick cannot be explained. An appropriate capability for an XAI system in this situation is the ability to recognize that it has encountered a novel, out-of-distribution sample.
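A simple baseline for recognizing an out-of-distribution input is its distance to the nearest previously seen example in some feature space: if nothing seen before is close, the system should report that it has no explanation rather than force one. The sketch below assumes tricks have already been embedded as numeric feature vectors and that the threshold was tuned on held-out data; both are assumptions, and nearest-neighbor distance is only one of several OOD detection methods.

```python
import math
from typing import Sequence

Point = Sequence[float]


def nearest_distance(x: Point, reference: Sequence[Point]) -> float:
    """Euclidean distance from x to its closest reference example."""
    return min(math.dist(x, r) for r in reference)


def is_out_of_distribution(x: Point, reference: Sequence[Point], threshold: float) -> bool:
    """Flag x as novel when no reference example lies within `threshold`."""
    return nearest_distance(x, reference) > threshold


# Hypothetical feature vectors for tricks the system has already seen.
seen = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
print(is_out_of_distribution((0.5, 0.5), seen, threshold=1.0))  # False
print(is_out_of_distribution((5.0, 5.0), seen, threshold=1.0))  # True
```

A flagged input corresponds to a Type 4 response: the honest output is "we have no idea", not a low-confidence guess.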

Summary

The entire premise of Fool Us is for P&T to explain what they think is going on in their visual and auditory fields as the performers actively try to fool them. P&T’s explanations are both domain-specific and audience-specific. In order to be relevant, explanations must take the expertise of the recipient into account. Designers of XAI systems need to recognize this important requirement.

P&T’s explanations are conversational and hew closely to the Gricean maxims of quantity, quality, and relevance. However, they consciously violate the fourth maxim, manner, which calls for avoiding obscurity of expression and ambiguity, in order to protect the secrets of magic.

Finally, we note that there are two distinct stages of explanation on the show. The first is diagnosing how the trick was done; the second is conveying that diagnosis in conversational terms to the performing magician.
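The two stages map naturally onto two functions: one that diagnoses the cause and one that renders the diagnosis for a given audience, which is also where the audience-specific, deliberately coded phrasing described above would live. In this minimal sketch the codebook, the step labels, and the audience strings are hypothetical, and the diagnosis stage is a dictionary lookup standing in for what would really be a model.

```python
from dataclasses import dataclass, field

# Hypothetical codebook from plain-language steps to shared jargon terms.
CODEBOOK = {"step A": "term A", "step B": "term B"}


@dataclass
class Diagnosis:
    steps: list[str] = field(default_factory=list)  # steps the system could account for
    complete: bool = False                          # True if every observed step is accounted for


def diagnose(observed: list[str]) -> Diagnosis:
    """Stage 1: work out which observed steps the system can account for."""
    explained = [s for s in observed if s in CODEBOOK]
    return Diagnosis(explained, bool(observed) and len(explained) == len(observed))


def convey(d: Diagnosis, audience: str) -> str:
    """Stage 2: phrase the diagnosis for a particular audience."""
    if not d.steps:
        return "You fooled us."
    verdict = "You did not fool us" if d.complete else "You partially fooled us"
    if audience == "performer":
        # Jargon is meaningful to the performer but opaque to the public.
        return f"{verdict}: {', '.join(CODEBOOK[s] for s in d.steps)}."
    # Any other audience hears the verdict only, keeping the method secret.
    return f"{verdict}."


print(convey(diagnose(["step A", "step B"]), "performer"))  # You did not fool us: term A, term B.
print(convey(diagnose(["step A", "step C"]), "public"))     # You partially fooled us.
```

Keeping the stages separate means the same diagnosis can be rendered differently for each audience without re-running the (expensive) diagnosis.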

Taken together, these observations of explanations in adversarial settings suggest a generic architecture for explainable AI systems. A forthcoming paper will present one candidate architecture.

This article has benefited greatly from discussions with and suggestions from Wendy P, Andrea B, George S, Bob G, Zach L, Pete T, Vishal S, Kinga D, and Carrie S.

Originally published at http://github.com.

Header + footer image by George Pagan III on Unsplash.