Imagine training a self-driving car system. What would you want it to learn? Perhaps to ensure the car drives within the lanes and definitely not to cross the yellow lines. Maybe it should understand the meaning of (and act appropriately for) stop signs, speed limits, and railroad crossings. But how about the presence of pedestrians outside a crosswalk? A dog that escaped from its collar and is now chasing squirrels on the road? Or a cow that wandered off its grazing land? Very quickly, numerous scenarios (the results of fat tails) arise for which there may not be sufficient – let alone any – training data to build an effective computer vision model for this task. So, what can we do?

The promise of applying modern machine learning (ML) methods towards solving complex problems must account for these potential data issues. In our self-driving car example, it would be dangerous and nearly impossible to collect enough real-world training data to capture the myriad of driving situations. Yet, researchers have approached this problem by building training datasets using simulated collision scenarios via gaming engines (Grand Theft Auto, specifically). The study authors forced bot-agents in the game to veer towards the main player car, resulting in a fully labelled set of crash or near-crash scenarios. Many other applications which could benefit from this methodology (remote sensing, medical imaging, fraud detection in finance) are also plagued by the aforementioned data issues. This presents an opportunity for the application of synthetic data.

In this multi-part blog-post series, we delve into IQT Labs’ Synthesizing Robustness effort that explores how to best leverage and enhance synthetic data. We build upon the RarePlanes project, which examined the value of synthetic data in aiding computer vision algorithms tasked with detecting rare objects in satellite imagery. Synthesizing Robustness extends RarePlanes’ work by exploring whether Generative Adversarial Networks (GANs), a type of deep learning model that can be used to produce photo-realistic images to improve the value of synthetic data.

Synthetic Data in Use

Scientists have been using computer simulations to test ideas before running those methods on real data for nearly a century. But what happens when we use synthetic data to train complex ML systems? Deep learning models are renowned for overfitting and finding patterns specific to the data, which are not necessarily representative of the real world. To leverage synthetic data, machine learning practitioners must be aware of the potential differences resulting from this domain gap and know how minimize their effects in use.

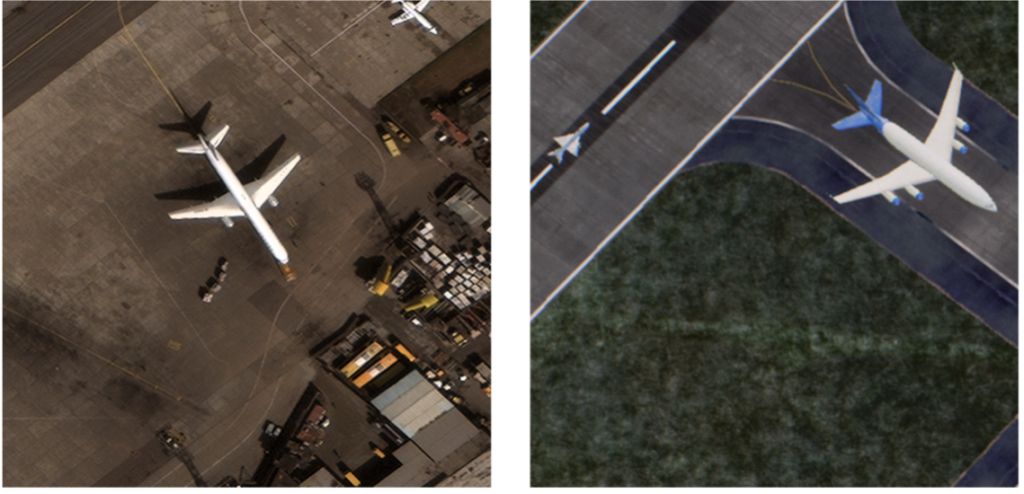

IQT Labs built the unique RarePlanes dataset that included both real imagery and synthetic satellite imagery generated using gaming engines. The RarePlanes project examined the value of synthetic data in aiding automatic detection of rarely observed objects (specifically aircraft) in satellite imagery. And while the addition of synthetic data improved rare plane detection performance, real data remains the gold standard. Simulated (game engine) data may look increasingly convincing to the human eye, but trained deep learning computer vision models may focus on background features, such as differences in the shadows and grass textures such as shown in the figure above, rather than the salient points of the aircraft themselves. We hypothesized that more realistic synthetic data may further boost performance in computer vision tasks.

GAN for Data Augmentation

Generative Adversarial Networks contributed significant advancements for data generation in recent years. Indeed, GANs can be used for image-to-image translation by training a generator network to alter low-level image features (such as textures and lighting) to match the style of a similar or related dataset. In this project we apply GAN techniques as an effective “realism-filter” to RarePlanes synthetic imagery to characterize changes to classification and detection performance using a GAN-enhanced synthetic dataset.

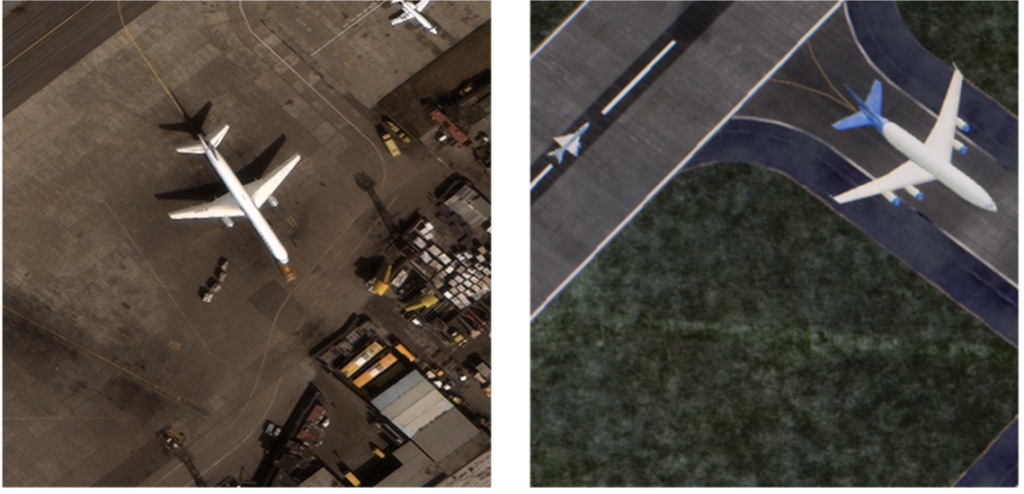

One such technique is called CycleGAN, which uses two pairs of generators and discriminators that learn how to transform images from a source domain to a target domain and vice versa. An outline of the pipeline applied to the RarePlanes dataset is shown below. Here, we take the synthetic data as the source imagery into the target domain of real images.

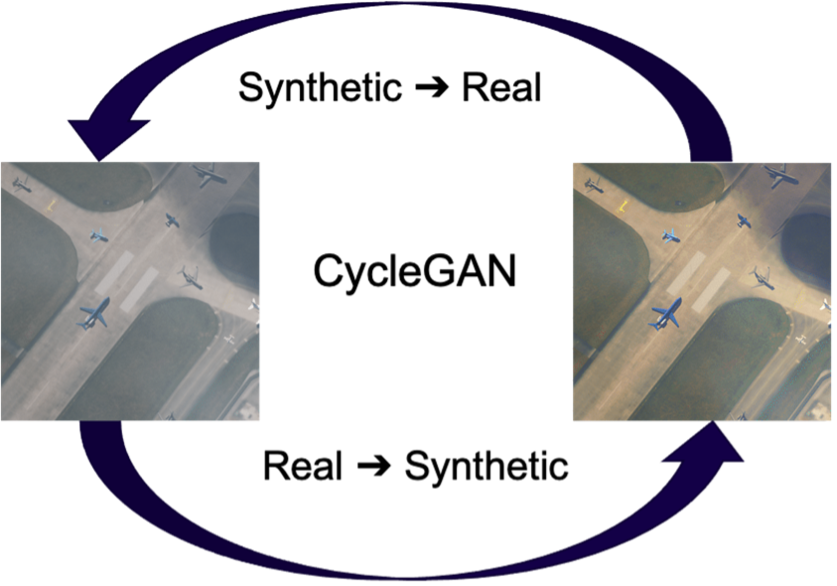

We applied the CycleGAN technique to the RarePlanes dataset, and some examples are shown below. The left column of the figure shows the original synthetic data and the right column shows the “realism-filtered” images using CycleGAN. There are global differences such as lighting: CycleGAN imitates the effect of sunlight, making the images warmer, whereas the synthetic images exhibit more of a neutral white hue. Another noticeable difference is the landscape: synthetic images tend to have more grass and the CycleGAN tends to remove it in favor of more arid landscapes. These specific examples highlight how the domain gap can be more than unrealistic renders, including even differences in physical distributions. CycleGAN is merely the first GAN that we implemented on the RarePlanes dataset, and other GAN methods may yield similar or even superior results.

Experiment Setup

- Our main goal in using a GAN for domain adaptation is to determine whether GAN application leads to improved model performance. To tackle this question, we refined the train/test splits and training process of the RarePlanes detector, with the goal of reserving rare plane makes in our dataset for downstream fine-tuning tasks of the model. More common aircraft makes could be used for pre-training en masse, as these are less relevant for training a model to detect the specific set of aircraft that fit the following criteria: the airplane make is represented in both the real and synthetic sets.

- There are at least 100 unique samples of the airplane make in the real and synthetic sets (so we have enough to include in a test partition).

There were 15 aircraft makes that met the above criteria and were considered rare. All remaining craft types were labelled with the class ‘Other.’ The pre-train dataset included all ‘Other’ craft that did not have any of the rare airplane makes and is used to first train the airplane detector as a generic airplane detector. This effectively works as a pre-training step that can then be transfer learned into the main task that interests us.

Next, this pre-trained model is further trained on the fine-tune dataset, but now the task is not only to detect airplanes but to classify the airplane make. The table below shows the number of real craft in the training portion of this fine-tune dataset, along with the supplemental synthetic images for each of those classes, which are hypothesized to improve model performance at detecting and classifying these rare craft:

| Make | # Real Images in Fine-Tune Training Set | # Synthetic Images |

|---|---|---|

| 0 (other) | 1137 | 519 |

| 1 | 236 | 124 |

| 2 | 216 | 62 |

| 3 | 140 | 72 |

| 4 | 76 | 76 |

| 5 | 74 | 59 |

| 6 | 69 | 80 |

| 7 | 45 | 69 |

| 8 | 28 | 79 |

| 9 | 19 | 75 |

| 10 | 12 | 84 |

| 11 | 11 | 73 |

| 12 | 10 | 69 |

| 13 | 8 | 80 |

| 14 | 6 | 74 |

Preliminary baseline results using YOLTv4 following this pre-train + fine-tune pipeline are presented below. As demonstrated in table 1, we have made the detection task more challenging by using as few as six examples for an airplane class. This is verified by the results in table 2. We use mean F1 score (mF1) between all 15 aircraft classes in Table 2, and report bootstrapped uncertainty estimates. We believe that this experimental setup is ideal to demonstrate the potential of synthetic data. These initial experiments using YOLTv4 indicate a slight aggregate boost to performance when including synthetic data, though further exploration into individual aircraft classes in forthcoming work may yet prove illuminating.

| Trained on Real Data | Trained on Real & Synthetic | |

|---|---|---|

| mF1 | 0.33 ± 0.03 | 0.37 ± 0.02 |

What’s to Come

We are excited to kick off the Synthesizing Robustness project with some preliminary results, but whether the inclusion of GANs result in significant improvements to model performance in plane identification and classification remains an open question. Stay tuned for our follow-on blogs where we dive much deeper into the benefits and potential pitfalls of GAN-adapted synthetic data.

This blog is the work of Felipe Mejia, Adam Van Etten, Zig Hampel, and Kinga Dobolyi. Thanks to Nick Weir for experiment design and to Jake Shermeyer for initial dataset curation.