The SpaceNet 7 Multi-Temporal Urban Development Challenge Algorithmic Baseline

Preface: SpaceNet LLC is a nonprofit organization dedicated to accelerating open source, artificial intelligence applied research for geospatial applications, specifically foundational mapping (i.e., building footprint & road network detection). SpaceNet is run in collaboration by co-founder and managing partner CosmiQ Works, co-founder and co-chair Maxar Technologies, and our partners including Amazon Web Services (AWS), Capella Space, Topcoder, IEEE GRSS, the National Geospatial-Intelligence Agency and Planet.

The SpaceNet 7 Challenge has an ambitious goal: identify and track building locations and change (i.e., construction) at the individual building identifier level, all from a deep temporal stack of medium resolution satellite imagery. As discussed in our SN7 announcement blog, this task is highly relevant to myriad humanitarian, urban planning, and disaster response scenarios. In this post we open source an algorithmic baseline for the SpaceNet 7 Challenge, both to demonstrate that the proposed task is indeed feasible and to provide a starting point that significantly lowers the barrier to entry for participants in the upcoming competition.

1. Solaris

Solaris is an open source machine learning pipeline for overhead imagery developed by CosmiQ Works. This package allows non-experts in the geospatial realm to build, train, test, and score machine learning models with a simple and well-documented workflow. Furthermore, the significant effort put into data preprocessing greatly reduces the challenges inherent in using geospatial data; for example, the oft-annoying task of converting GeoJSON labels in an arbitrary coordinate reference system (CRS) into pixel coordinates or a pixel mask is trivial within Solaris. Accordingly, and unsurprisingly, we use Solaris for the baseline algorithm.
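To give a feel for what that label conversion involves, here is a minimal pure-Python sketch of mapping georeferenced coordinates to pixel coordinates with an affine geotransform. It is not the Solaris implementation: the function names, the GDAL-style ordering of the six coefficients, and the example numbers are all assumptions made for illustration, and the points are assumed to already be in the image's CRS (reprojection is not covered).

```python
from typing import List, Tuple

# Affine geotransform in GDAL-style ordering (an assumption for this sketch):
# x_geo = x0 + col * a + row * b
# y_geo = y0 + col * d + row * e
GeoTransform = Tuple[float, float, float, float, float, float]  # (x0, a, b, y0, d, e)


def geo_to_pixel(x: float, y: float, gt: GeoTransform) -> Tuple[float, float]:
    """Map a georeferenced (x, y) point to fractional (col, row) pixel coordinates."""
    x0, a, b, y0, d, e = gt
    det = a * e - b * d
    if det == 0:
        raise ValueError("geotransform is not invertible")
    dx, dy = x - x0, y - y0
    # Invert the 2x2 matrix [[a, b], [d, e]] applied to (col, row).
    col = (e * dx - b * dy) / det
    row = (-d * dx + a * dy) / det
    return col, row


def polygon_to_pixels(ring: List[Tuple[float, float]],
                      gt: GeoTransform) -> List[Tuple[float, float]]:
    """Convert every vertex of a polygon ring to pixel coordinates."""
    return [geo_to_pixel(x, y, gt) for x, y in ring]


# Hypothetical north-up image: origin at (500000, 4100000), 4-unit square pixels.
example_gt = (500000.0, 4.0, 0.0, 4100000.0, 0.0, -4.0)
print(geo_to_pixel(500040.0, 4099960.0, example_gt))  # (10.0, 10.0)
```

In practice Solaris handles this step (and the CRS bookkeeping around it), so the sketch is only meant to show why the conversion is tedious to do by hand.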

2. Data Access

As always with SpaceNet, data is freely available as part of the Registry of Open Data on AWS. All you need is an AWS account and the AWS CLI installed and configured. Once you’ve done that, simply run the commands below to download the training and public test datasets to your working directory:

cd /working_directory/

aws s3 cp s3://spacenet-dataset/spacenet/SN7_buildings/tarballs/SN7_buildings_train.tar.gz .

aws s3 cp s3://spacenet-dataset/spacenet/SN7_buildings/tarballs/SN7_buildings_test_public.tar.gz .

3. Data Prep

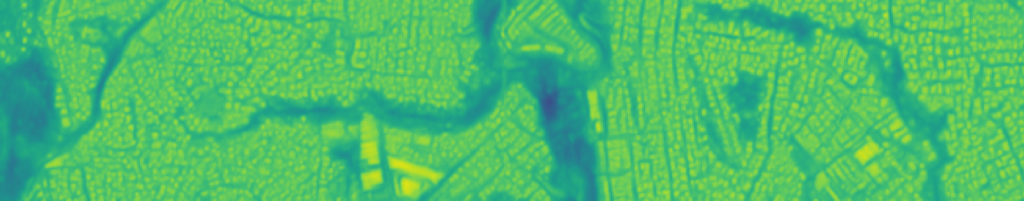

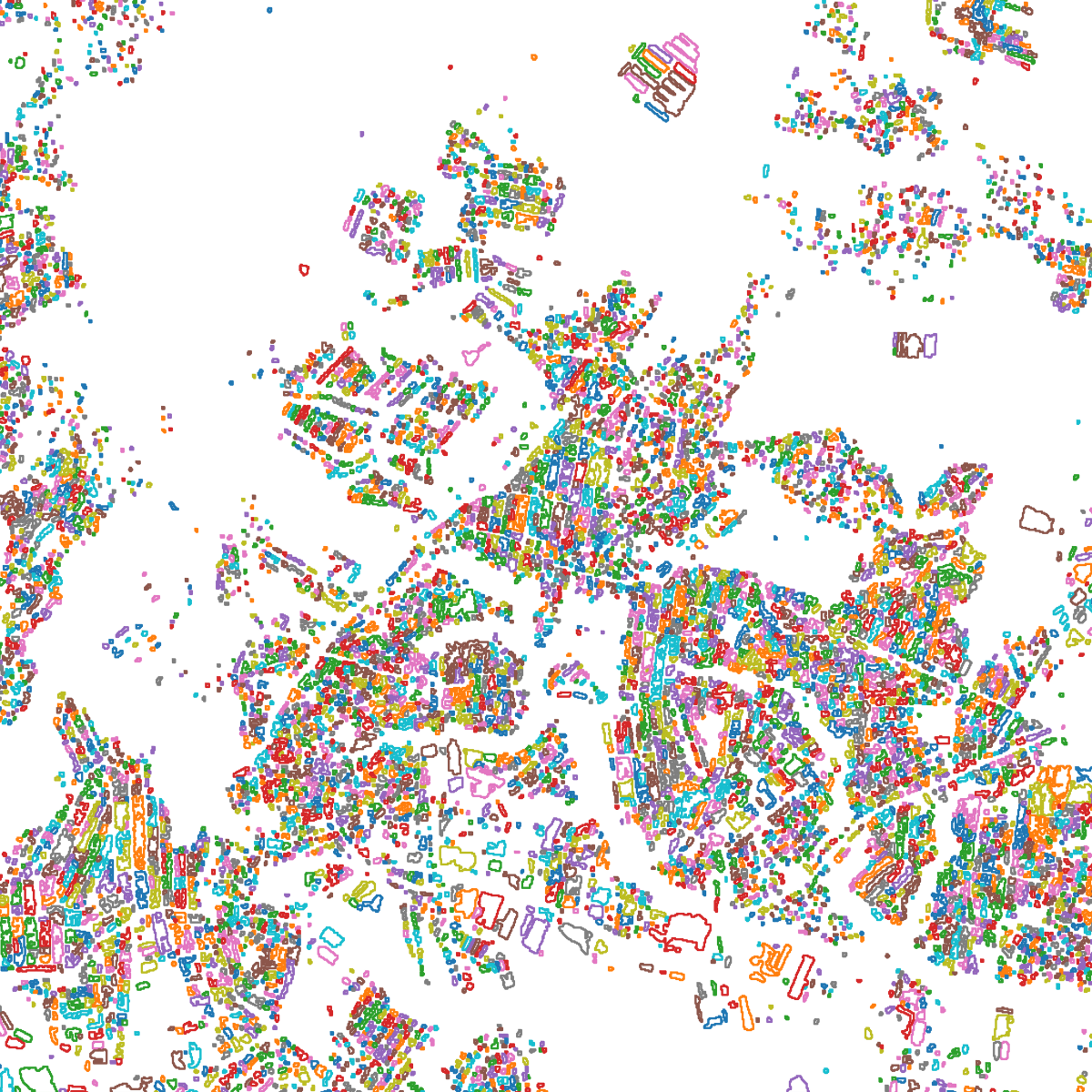

SpaceNet 7 building footprint labels are in GeoJSON format. We will use the labels_match folder, which contains both the geometry and unique identifier for each building (see Figure 1).

Training masks are created from these labels via:

make_geojsons_and_masks(name_root, image_path, json_path,
                        output_path_mask, output_path_mask_fbc=None)

Which will render something akin to:

Once the training masks have been created, we simply create a CSV with the data locations, edit the filepaths in the Solaris .yml file, and we are ready to launch training.
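As a rough illustration of the CSV step, the sketch below pairs each image with the mask of the same file name and writes one row per pair. The directory layout, the `.tif` extension, the same-name pairing rule, the helper name `write_training_csv`, and the `image` / `label` column names are assumptions for this example; check them against the Solaris documentation and the baseline repository before relying on them.

```python
import csv
from pathlib import Path


def write_training_csv(image_dir: str, mask_dir: str, out_csv: str) -> int:
    """Pair each image with the mask of the same file name and write a CSV.

    Returns the number of image/mask pairs written.
    """
    rows = []
    for image_path in sorted(Path(image_dir).glob("*.tif")):
        mask_path = Path(mask_dir) / image_path.name
        if mask_path.exists():  # skip images that have no mask
            rows.append({"image": str(image_path), "label": str(mask_path)})

    with open(out_csv, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["image", "label"])
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

A call such as `write_training_csv("train/images", "train/masks", "sn7_train.csv")` (hypothetical paths) would then produce the file referenced from the .yml configuration.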

4. Training

Training is simple to launch. Once the baseline algorithm has been downloaded from GitHub and the dockerfile built, simply run:

python sn7_baseline_public_train.py

This model is based upon XD_XD’s submission to the SpaceNet 4 challenge, and features a VGG16 + U-Net architecture. Training the baseline model for the full 300 epochs should take about 20 hours (~$60) on a p3.2xlarge AWS instance. Alternatively, instead of training, one could use the pre-trained weights included in the baseline repository.
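The cost figure is simple arithmetic on the training time. The sketch below assumes an on-demand p3.2xlarge rate of $3.06 per hour, which is not stated in this post and varies by region and over time, so treat the rate as a placeholder and check current AWS pricing.

```python
TRAINING_HOURS = 20.0    # approximate training time quoted above
HOURLY_RATE_USD = 3.06   # assumed on-demand p3.2xlarge rate; check current pricing


def training_cost(hours: float, hourly_rate: float) -> float:
    """Estimated cost of a single training run, in US dollars."""
    return hours * hourly_rate


print(f"Estimated training cost: ~${training_cost(TRAINING_HOURS, HOURLY_RATE_USD):.0f}")
```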

5. Inference — Segmentation Mask

Once training is completed (or pre-trained weights are selected), inference can be initiated via:

python sn7_baseline_public_infer.py

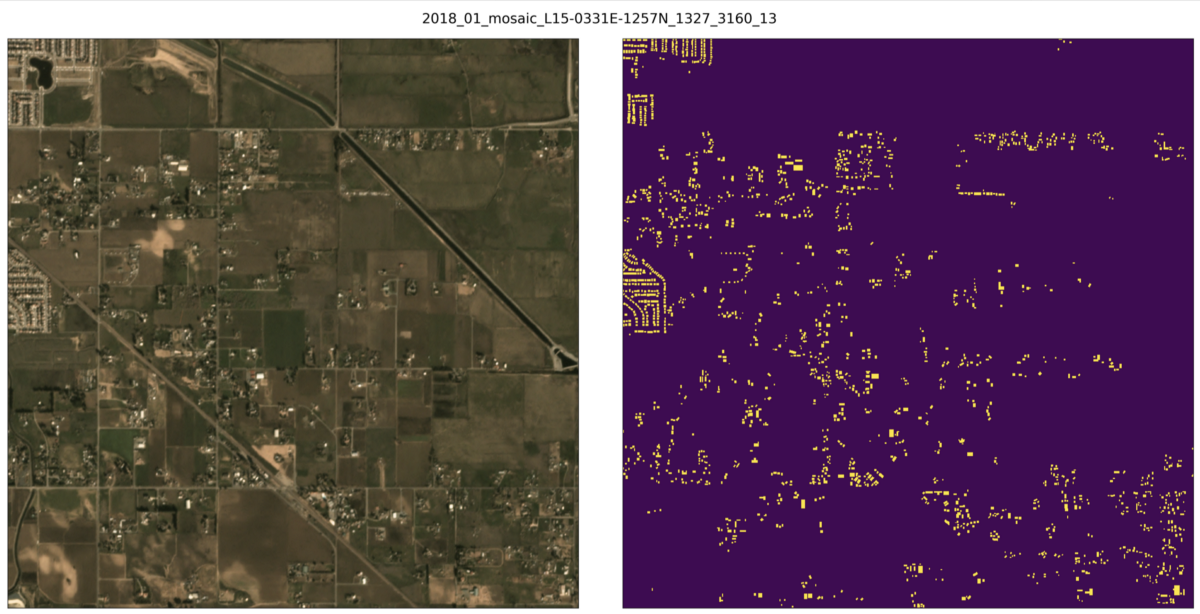

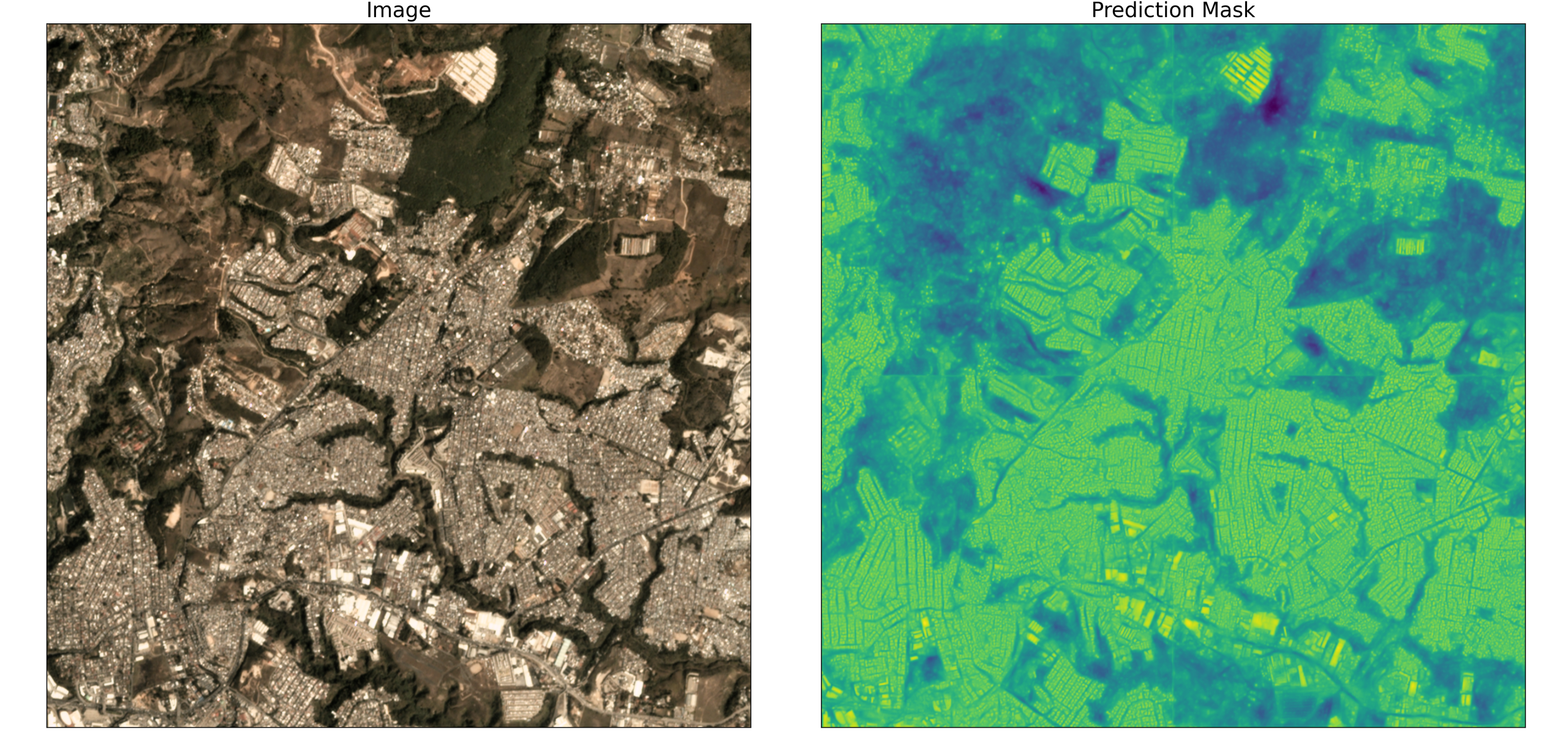

For the SpaceNet 7 test_public dataset, the script will execute in ~2.5 minutes on a p3.2xlarge AWS instance (which equates to an inference rate of ~60 square kilometers per second). The output of the segmentation model is a prediction mask of building footprints (see Figures 3, 4), and performance is surprisingly good given the moderate resolution of the imagery.

6. Footprint Extraction

Building footprint geometries can be quickly extracted from the prediction mask with Solaris:

geoms = sol.vector.mask.mask_to_poly_geojson(mask_image,
            min_area=min_area, output_path=output_path_pred,
            output_type='geojson', bg_threshold=bg_threshold)
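To illustrate the roles of the two thresholds, here is a minimal pure-Python sketch that groups above-threshold pixels into connected blobs and discards small ones. It is not how Solaris is implemented and it stops short of producing polygons; the function name, the 4-connectivity, and the reading of `bg_threshold` (pixels at or below it are background) and `min_area` (minimum blob size in pixels) are assumptions for illustration.

```python
from collections import deque
from typing import List, Tuple

Pixel = Tuple[int, int]  # (row, col)


def extract_blobs(mask: List[List[float]], bg_threshold: float,
                  min_area: int) -> List[List[Pixel]]:
    """Return one pixel list per 4-connected foreground blob of at least min_area pixels."""
    n_rows = len(mask)
    n_cols = len(mask[0]) if n_rows else 0
    seen = [[False] * n_cols for _ in range(n_rows)]
    blobs: List[List[Pixel]] = []

    for r in range(n_rows):
        for c in range(n_cols):
            if seen[r][c] or mask[r][c] <= bg_threshold:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            seen[r][c] = True
            queue = deque([(r, c)])
            blob: List[Pixel] = []
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < n_rows and 0 <= nx < n_cols
                            and not seen[ny][nx] and mask[ny][nx] > bg_threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) >= min_area:  # drop blobs too small to be a building
                blobs.append(blob)
    return blobs


toy_mask = [
    [0, 200, 200, 0, 0],
    [0, 200, 200, 0, 90],
    [0, 0, 0, 0, 0],
    [30, 0, 0, 180, 180],
]
print([len(b) for b in extract_blobs(toy_mask, bg_threshold=100, min_area=2)])  # [4, 2]
```

Raising `min_area` to 3 in this toy example keeps only the 4-pixel blob, which is the kind of trade-off one tunes when buildings span only a handful of pixels.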

7. Building Identifier Tracking

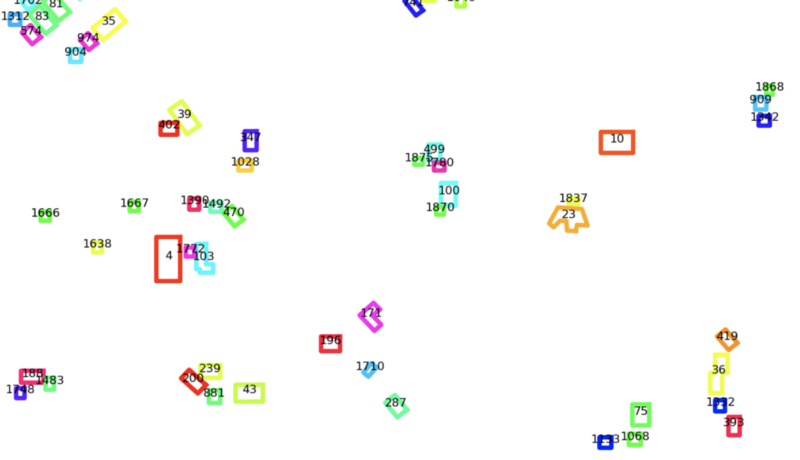

Connecting building identifiers between time steps is the trickiest stage of the baseline algorithm. The inclusion of Unusable Data Masks (UDMs) in the SpaceNet 7 labels is important from a dataset completeness perspective, but complicates building identifier tracking. This is because building labels at times behave like subatomic particles, popping into and out of existence seemingly at random depending on cloud cover. This is illustrated in Figure 6.

Despite these complications, one can extract persistent building identifiers using the following function, which tracks footprint identifiers across the deep time stack:

track_footprint_identifiers(json_dir, out_dir, min_iou=min_iou,
                            iou_field='iou_score', id_field='Id')

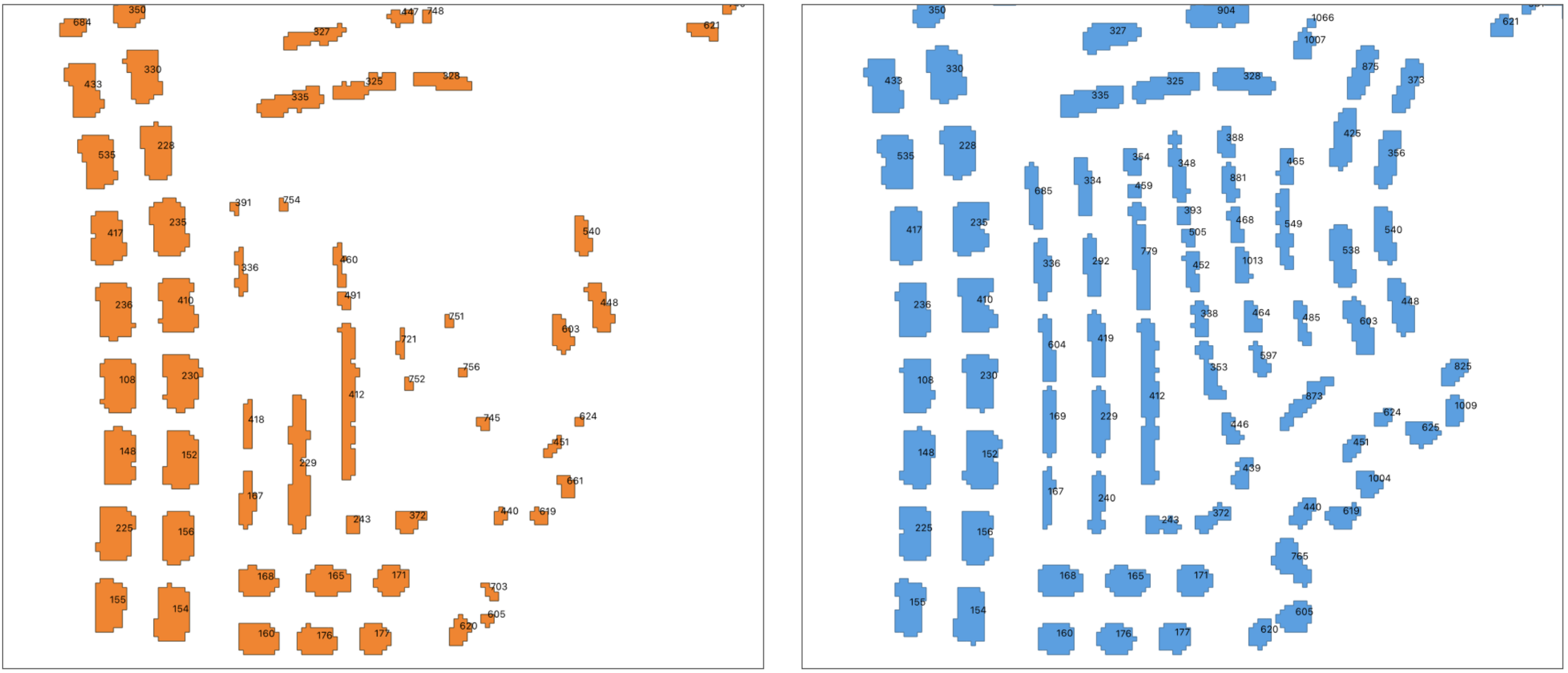

While this footprint matching module is of course imperfect, in many cases it works quite well (see Figure 7).
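The general idea of IOU-based identifier matching can be sketched in a few lines. The example below is a simplified stand-in rather than the baseline's code: it uses axis-aligned boxes instead of polygons, matches greedily, and the names `iou` and `track_ids` as well as the default `min_iou` of 0.25 are assumptions for illustration. Remembering the last known footprint for every identifier is what lets a building keep its identifier after disappearing for a time step.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def track_ids(frames: List[List[Box]], min_iou: float = 0.25) -> List[List[int]]:
    """Assign an identifier to every box in every time step.

    A box inherits the identifier of the best-overlapping, not yet claimed
    footprint seen in any earlier time step; otherwise it gets a new one.
    """
    known: Dict[int, Box] = {}  # identifier -> most recently seen box
    next_id = 1
    all_ids: List[List[int]] = []
    for boxes in frames:
        claimed = set()
        frame_ids: List[int] = []
        for box in boxes:
            best_id, best_score = None, min_iou
            for ident, prev in known.items():
                if ident in claimed:
                    continue
                score = iou(box, prev)
                if score >= best_score:
                    best_id, best_score = ident, score
            if best_id is None:  # nothing overlaps enough: new building
                best_id = next_id
                next_id += 1
            claimed.add(best_id)
            frame_ids.append(best_id)
        # Update the memory only after the whole time step has been matched.
        for ident, box in zip(frame_ids, boxes):
            known[ident] = box
        all_ids.append(frame_ids)
    return all_ids


frames = [
    [(0, 0, 10, 10), (20, 20, 30, 30)],
    [(20, 20, 30, 30)],  # first building missing (e.g. masked by cloud)
    [(1, 0, 11, 10), (21, 20, 31, 30), (50, 50, 60, 60)],
]
print(track_ids(frames))  # [[1, 2], [2], [1, 2, 3]]
```

In the toy sequence the first building vanishes in the second time step and reappears slightly shifted in the third, yet it keeps identifier 1, while the genuinely new footprint receives identifier 3.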

8. Scoring

Scoring with the legacy SpaceNet metric of an IOU-based F1 provides an aggregate score of F1 = 0.42. Previous SpaceNets had far higher scores (e.g. F1 > 0.8 at nadir in SpaceNet 4) despite using a higher IOU threshold of 0.5 (vs 0.25 for SpaceNet 7 due to the very small building pixel sizes), but their imagery was also ~10✕ sharper. On balance, the baseline F1 score is still higher than we anticipated.
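For readers new to the metric, the sketch below shows how an IOU threshold turns matched footprints into an F1 score. It is a simplified illustration, not the official scoring code: the function name is hypothetical, it assumes each prediction has already been paired with at most one ground truth footprint, and counting a match when the IOU is greater than or equal to the threshold is an assumption.

```python
from typing import List


def f1_from_ious(best_ious: List[float], n_truth: int,
                 iou_threshold: float = 0.25) -> float:
    """F1 score given, for each prediction, the IOU with its matched ground truth.

    best_ious holds one value per predicted footprint (0.0 if unmatched);
    n_truth is the number of ground truth footprints.
    """
    tp = sum(1 for v in best_ious if v >= iou_threshold)
    fp = len(best_ious) - tp   # predictions without a good enough match
    fn = n_truth - tp          # ground truth footprints that were missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


toy_ious = [0.8, 0.3, 0.1, 0.0]   # four predictions, made-up values
print(round(f1_from_ious(toy_ious, n_truth=5, iou_threshold=0.25), 3))  # 0.444
print(round(f1_from_ious(toy_ious, n_truth=5, iou_threshold=0.5), 3))   # 0.222
```

The same toy predictions score half as well at a threshold of 0.5 as at 0.25, which is why scores across SpaceNet challenges with different thresholds are not directly comparable.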

Yet this F1 score does not capture the dynamic nature of the SpaceNet 7 dataset, and therefore the SCOT metric is far more informative. More details on that metric are available in a previous blog, but in brief: the SpaceNet Change and Object Tracking (SCOT) metric combines an object tracking term and a change term into a single score. For SpaceNet 7, we find the change term (mean score of 0.06) to be far more challenging than the tracking term (mean score of 0.39). In the aggregate, scoring each of the 20 areas of interest in the SpaceNet test_public dataset gives a combined score of SCOT = 0.158 (out of a possible 1.0).
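As a rough sketch of how two such terms can be folded into one number, the example below uses a weighted harmonic mean in the style of an F-beta score. Both the combination rule and the weight `beta = 2` (which favors the tracking term) are assumptions here, and the function name is hypothetical; the SCOT blog is the reference for the actual definition. Plugging the two mean terms into this sketch is not expected to reproduce the reported 0.158, which is computed over the 20 individual areas of interest.

```python
def combine_terms(tracking: float, change: float, beta: float = 2.0) -> float:
    """Weighted harmonic mean of a tracking term and a change term.

    With beta > 1 the tracking term carries more weight (assumed weighting).
    """
    if tracking <= 0.0 or change <= 0.0:
        return 0.0
    b2 = beta * beta
    return (1.0 + b2) * change * tracking / (b2 * change + tracking)


# Mean terms quoted above, used only to show the behavior of the formula.
print(round(combine_terms(tracking=0.39, change=0.06), 3))            # 0.186
print(round(combine_terms(tracking=0.39, change=0.06, beta=1.0), 3))  # 0.104
```

Because a harmonic mean is dominated by its smaller input, a low change term drags the combined score down even when tracking is comparatively strong.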

9. Conclusions

While the SpaceNet 7 task is a very challenging one, and we are happy with this score as an initial baseline, there is certainly much room for improvement. See the CosmiQ_SN7_Baseline GitHub repository for further details on the algorithmic baseline and how to run through the entire algorithm from start to finish.

The ability to localize and track the change in building footprints over time is fundamental to myriad applications, from disaster preparedness and response to urban planning. The SpaceNet 7 baseline model discussed in this blog should provide a good starting point for further investigation of this challenging task. With the upcoming launch of SpaceNet 7 (now slated for September 8, 2020), we look forward to seeing what improvements can be made to this baseline.

* Thanks to Nick Weir and Daniel Hogan for assistance designing the algorithmic baseline.

* 2020 — Aug — 28 Update: This blog was updated to include more details on scoring.