The SpaceNet Change and Object Tracking (SCOT) Metric

Preface: SpaceNet LLC is a nonprofit organization dedicated to accelerating open-source, applied artificial intelligence research for geospatial applications, specifically foundational mapping (i.e., building footprint and road network detection). SpaceNet is managed solely by co-founder In-Q-Tel CosmiQ Works, in collaboration with co-founder and co-chair Maxar Technologies and the other partners: Amazon Web Services (AWS), Capella Space, Topcoder, Institute of Electrical and Electronics Engineers (IEEE) Geoscience and Remote Sensing Society (GRSS), the National Geospatial-Intelligence Agency (NGA) and Planet.

The SpaceNet 7 Multi-Temporal Urban Development Challenge has the ambitious goal of tracking precise building addresses and urban change from satellite imagery. As detailed in our announcement blog, the goal of SpaceNet 7 is relevant to numerous human development and disaster response applications. Furthermore, the unique SpaceNet 7 dataset is challenging from a computer vision standpoint because of the small pixel area of each object, the high object density within images, and image-to-image differences that are far more dramatic than the frame-to-frame variation typical of video object tracking.

How exactly one should measure performance for the SpaceNet 7 task is a tricky question given the multiple dimensions of the dataset. First, each building footprint is assigned a unique identifier (i.e., address), which we would like to track over time. Second, there is significant construction activity in the SpaceNet 7 data cubes, with new buildings appearing (or disappearing) throughout the time series. Ideally, we would like to quantify the ability of machine learning algorithms to correctly predict when and where the change takes place. These two competing priorities (tracking and change) are not fully captured by existing metrics, which led us to develop a new metric: the SpaceNet Change and Object Tracking (SCOT) metric. This blog post details the advantages and specifics of this new metric, which will be used to score the upcoming SpaceNet 7 challenge.

1. The SCOT Metric

For SpaceNet 7, the ground truth and the model-generated proposals both consist of a set of building footprints for each month. Each footprint is assigned an ID number, the idea being that each reappearance of the same ID in subsequent months corresponds to a new observation of the same building. Measuring the concordance between the ground truth and the proposals is the job of an evaluation metric.

We originally intended to use the Multiple Object Tracking Accuracy (MOTA) metric for SpaceNet 7, which is commonly used for object tracking in video. Yet this metric is not a good choice for difficult sequences, since errors are compounded additively (rather than being “averaged” out via a harmonic mean, as in the historical SpaceNet metric). As a result, for difficult scenes with few true positives and many false positives, it is possible to achieve a negative MOTA score. Since we are tackling a very hard problem in SpaceNet 7, MOTA is a poor choice. In fact, all existing metrics that we investigated proved to be a poor fit for our dataset and challenge task, leading us to develop our own metric.
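The additive failure mode is easy to see with the commonly used form of MOTA, 1 - (FN + FP + IDSW) / GT, where counts are summed over all frames and IDSW is the number of identity switches. The sketch below is illustrative only; the counts are hypothetical, chosen to mimic a hard scene with few true positives and many false positives.

```python
def mota(fn: int, fp: int, id_switches: int, num_gt: int) -> float:
    """Common MOTA form: 1 - (FN + FP + IDSW) / GT, counts summed over all frames."""
    return 1.0 - (fn + fp + id_switches) / num_gt


# Hypothetical difficult scene: 100 ground-truth objects, 20 found,
# 80 missed, 150 spurious proposals, and no ID switches.
score = mota(fn=80, fp=150, id_switches=0, num_gt=100)
print(score)  # roughly -1.3: the error terms outweigh the ground-truth count
```

Nothing bounds the error terms by the number of ground-truth objects, so the score can fall well below zero. An F1 score built from the same counts (20 true positives, 150 false positives, 80 false negatives) would be 20 / (20 + 115) ≈ 0.15: still low, but bounded between 0 and 1.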

The SpaceNet Change and Object Tracking (SCOT) metric combines two terms: a tracking term and a change detection term. The tracking term evaluates how often the proposal correctly tracks the same buildings from month to month with consistent ID numbers. In other words, it measures the model’s ability to characterize what stays the same as time goes by. The change detection term evaluates how often the proposal correctly picks up on the construction of new buildings. In other words, it measures the model’s ability to characterize what changes as time goes by.

1.1 Matching Footprints

For both terms (tracking and change detection) in the SCOT metric, we start by finding “matches” between ground truth and proposal footprints for each month, just as in the original SpaceNet Metric used for previous SpaceNet building footprint challenges. To be matched, a ground truth footprint and a proposal footprint must have an intersection-over-union (IOU) exceeding a given threshold. Previous challenges used a threshold of 0.5, but for SpaceNet 7 we relax the threshold to 0.25 because lower-resolution imagery is harder to interpret. A threshold below 0.5 makes it possible for more than one proposal footprint to qualify for a match with the same ground truth footprint, or vice versa. To resolve that ambiguity, the SCOT metric selects matches in an optimized way when such choices arise: it uses the set of matches that minimizes the number of unmatched ground truth footprints plus the number of unmatched proposal footprints. If more than one set of matches achieves that minimum, the one with the highest sum of IOUs is selected as a tiebreaker. This is an instance of a long-studied combinatorial problem (the “unbalanced linear assignment problem”), and solvers for it are available in SciPy and elsewhere.
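To make the selection rule concrete, here is a minimal sketch using SciPy's `linear_sum_assignment`. It assumes the per-month IOU matrix has already been computed (for example with a polygon library), and it encodes the two-level objective by giving every eligible pair a constant bonus larger than any achievable IOU sum, so maximizing total weight first maximizes the number of matches and only then the sum of IOUs. The function name `match_footprints` and this weighting trick are our illustration, not necessarily how the official scoring code is written.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_footprints(iou: np.ndarray, threshold: float = 0.25) -> list[tuple[int, int]]:
    """Return (gt_index, proposal_index) pairs for one month.

    iou[i, j] is the precomputed IOU between ground-truth footprint i and
    proposal footprint j. Only pairs whose IOU exceeds `threshold` may match.
    """
    n_gt, n_prop = iou.shape
    if n_gt == 0 or n_prop == 0:
        return []
    eligible = iou > threshold
    # Each eligible pair is worth a bonus plus its IOU. The bonus exceeds any
    # possible IOU sum (each IOU <= 1), so the solver first maximizes the number
    # of matches (minimizing unmatched GT + unmatched proposals) and only then
    # breaks ties by the total IOU.
    bonus = min(n_gt, n_prop) + 1.0
    weight = np.where(eligible, bonus + iou, 0.0)
    rows, cols = linear_sum_assignment(weight, maximize=True)
    # The solver pairs up every row or column it can; zero-weight
    # (ineligible) assignments are not real matches, so drop them.
    return [(int(r), int(c)) for r, c in zip(rows, cols) if eligible[r, c]]


# Hypothetical IOUs: GT 0 overlaps proposals 0 and 1; GT 1 overlaps only proposal 0.
iou = np.array([[0.6, 0.4],
                [0.3, 0.0]])
print(match_footprints(iou))  # [(0, 1), (1, 0)]
```

In this toy case, a greedy highest-IOU-first rule would pair ground truth 0 with proposal 0 and leave the other two footprints unmatched; the assignment solver instead finds the solution with two matches.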

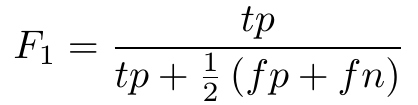

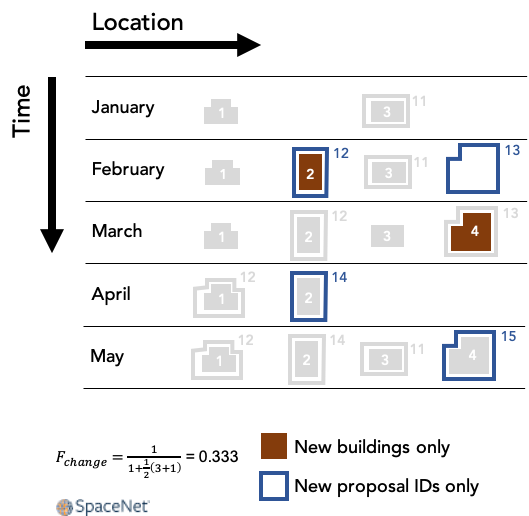

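For the original SpaceNet Metric, just one step remains after finding the matches: calculating the F1 score, treating every match as a true positive (tp), every unmatched proposal footprint as a false positive (fp), and every unmatched ground truth footprint as a false negative (fn):

$$F_1 = \frac{\text{tp}}{\text{tp} + \frac{1}{2}\left(\text{fp} + \text{fn}\right)}$$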

Each of the SCOT metric's two terms (tracking and change detection) is an F1 score computed by this same general procedure, but with one small (though significant) tweak in each case.
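Because both terms end in this same calculation, a shared helper is a natural starting point. This is a minimal sketch that assumes the matches have already been counted; the function name `spacenet_f1` is hypothetical, and returning 0 for the empty 0/0 case is our assumption.

```python
def spacenet_f1(num_matches: int, num_gt: int, num_proposals: int) -> float:
    """F1 score with every match counted as a true positive.

    Unmatched proposals are false positives and unmatched ground-truth
    footprints are false negatives. Returns 0.0 when there is nothing to
    score (an assumed convention for the 0/0 case).
    """
    tp = num_matches
    fp = num_proposals - num_matches
    fn = num_gt - num_matches
    denom = tp + 0.5 * (fp + fn)
    return tp / denom if denom > 0 else 0.0


print(spacenet_f1(num_matches=8, num_gt=10, num_proposals=12))  # 8 / 11, about 0.727
```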

1.2 The Tracking Term

To measure how well a proposed set of footprints tracks IDs from month to month, the tracking term calculation uses the same formula as above but applies a more stringent definition of what counts as a true positive.

A match between a ground truth footprint and a proposal footprint is considered a “mismatch” if the ground truth footprint was most recently matched with a different proposal footprint ID or if the proposal footprint was most recently matched with a different ground truth ID. (This is inspired by, but slightly different from, the MOTA definition of a mismatch.) When calculating the F1 score for tracking, only those matches that are not mismatches count as true positives. If a ground truth footprint is matched to a proposal footprint but it’s a mismatch, then the ground truth footprint is considered a false negative and the proposal footprint is considered a false positive, the same outcome as if they’d never been matched at all. The result is an F1 score that penalizes even correctly-located proposal footprints if their ID numbers are not consistent across time.

Figure 1 shows a made-up example of how this works. In this example, a small area with four buildings (ground truth IDs 1–4) is imaged each month for five months. The outlines show proposal footprints, labeled by their ID numbers. Buildings 2 and 4 are newly constructed during this time, and buildings 3 and 4 temporarily disappear in April, which can happen when an image is partially occluded by clouds. The model in this example does a good job of generating proposal footprints that are matched with ground truth building footprints, but it makes some mistakes with the proposals’ ID numbers, causing some of the matches to be mismatches. Notice that in April, proposal 12 shifts from being matched with building 2 to being matched with building 1. That leads to two mismatches in April: the match of building 1 and proposal 12 is a mismatch because proposal 12 had most recently been matched with a different building, while the match of building 2 and proposal 14 is a mismatch because building 2 had most recently been matched with a different proposal. On the other hand, the match of building 3 and proposal 11 in May is not a mismatch: the last time this building and this proposal were matched with anything, even though it was several months earlier, they were matched with each other.

What all these details have in common is that proposals that stay on target with the same building are not mismatches, while ID mistakes count as mismatches during the specific months when those mistakes occur, lowering the value of the tracking term.
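The mismatch rule can be sketched in a few lines of Python. This is a minimal illustration, not the official scoring code: the function names `find_mismatches` and `tracking_f1` are hypothetical, each month's matches are assumed to come from the matching step, every match (mismatch or not) is treated as the new "most recent" pairing for both footprints, and true positives, false positives, and false negatives are pooled over all months before computing F1, which is our assumption rather than something stated above.

```python
def find_mismatches(monthly_matches):
    """Flag mismatches month by month.

    monthly_matches: time-ordered list (one entry per month) of lists of
    (gt_id, proposal_id) pairs. Returns a parallel list of sets holding the
    pairs that are mismatches.
    """
    last_prop_for_gt = {}  # gt_id -> proposal_id it was most recently matched with
    last_gt_for_prop = {}  # proposal_id -> gt_id it was most recently matched with
    flagged = []
    for matches in monthly_matches:
        month_flags = set()
        for gt_id, prop_id in matches:
            # A mismatch if either side was most recently matched with someone else.
            gt_switched = gt_id in last_prop_for_gt and last_prop_for_gt[gt_id] != prop_id
            prop_switched = prop_id in last_gt_for_prop and last_gt_for_prop[prop_id] != gt_id
            if gt_switched or prop_switched:
                month_flags.add((gt_id, prop_id))
        # Every match this month, mismatch or not, becomes the most recent one.
        for gt_id, prop_id in matches:
            last_prop_for_gt[gt_id] = prop_id
            last_gt_for_prop[prop_id] = gt_id
        flagged.append(month_flags)
    return flagged


def tracking_f1(monthly_matches, n_gt_per_month, n_prop_per_month):
    """Tracking F1 with counts pooled over months (an assumed aggregation)."""
    flagged = find_mismatches(monthly_matches)
    # Only matches that are not mismatches count as true positives.
    tp = sum(len(m) - len(f) for m, f in zip(monthly_matches, flagged))
    fp = sum(n_prop_per_month) - tp
    fn = sum(n_gt_per_month) - tp
    denom = tp + 0.5 * (fp + fn)
    return tp / denom if denom > 0 else 0.0


# Hypothetical sequence (not the data behind Figure 1) that mimics the April
# and May events described above; every footprint present is matched.
months = [
    [(1, 10), (3, 11)],                    # Jan
    [(1, 10), (2, 12), (3, 11)],           # Feb
    [(1, 10), (2, 12), (4, 13)],           # Mar: building 3 unmatched
    [(1, 12), (2, 14)],                    # Apr: proposal 12 jumps to building 1
    [(1, 12), (2, 14), (3, 11), (4, 13)],  # May
]
print(find_mismatches(months)[3])  # the two April mismatches, (1, 12) and (2, 14)
counts = [len(m) for m in months]
print(tracking_f1(months, counts, counts))  # 12 / 14, about 0.857
```

With pooled counts, the two April mismatches turn 14 matches into 12 true positives, 2 false positives, and 2 false negatives, so the tracking F1 is 12/14 rather than the 1.0 the plain SpaceNet Metric would give for the same matches. With a single month there is no history, so no match can be a mismatch and the helper reduces to the ordinary F1.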

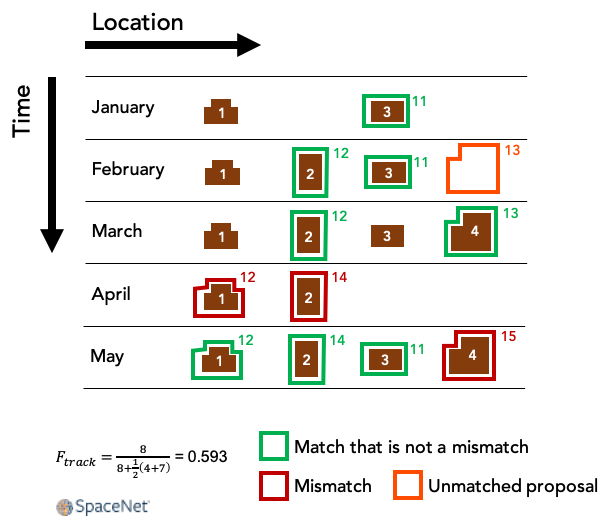

1.3 The Change Detection Term

Like the tracking term, the change detection term is an F1 score similar to the SpaceNet Metric, with one small modification. This time, we don’t worry about mismatches. Instead, once the matching is complete, we simply ignore every building and proposal that isn’t making its chronological first appearance. That amounts to dropping any footprint (ground truth or proposal) whose ID number has appeared in any previous month. The result is an F1 score concerned only with new buildings (i.e., changes) rather than all buildings, which requires the model not only to find new buildings but also to identify them as new.

Figure 2 shows how this works for the same made-up example considered above. Two new buildings appear: building 2 in February and building 4 in March. (Buildings already present in the first month of data don’t count.) Building 2’s February arrival is matched with proposal 12, which is also making its first appearance, so that counts as a true positive for the change detection term. Building 4’s arrival in March is matched with proposal 13, but that proposal ID already appeared in February, so its subsequent appearance in March is ignored. Building 4’s arrival is therefore a false negative.

To summarize the change detection term, only new footprints are used to calculate the F1 score. One important property of this term is that a set of static proposals that do not vary from one month to another will receive a change detection score of 0, even for a data cube with very little new construction, because none of those proposals is ever new after the first month.
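Below is a minimal sketch of the change detection term under stated assumptions: each month supplies the IDs present plus the matched pairs, every footprint in the first month is excluded, counts are pooled over months, and the empty 0/0 case scores 0. The function names `change_detection_counts` and `change_detection_f1` are hypothetical.

```python
def change_detection_counts(months):
    """Return (tp, fp, fn) counted over new footprints only.

    months: time-ordered list of (gt_ids, prop_ids, matches) tuples, where
    gt_ids and prop_ids are the IDs present that month and matches holds the
    (gt_id, prop_id) pairs from the per-month matching step.
    """
    seen_gt, seen_prop = set(), set()
    tp = fp = fn = 0
    for month_index, (gt_ids, prop_ids, matches) in enumerate(months):
        new_gt = set(gt_ids) - seen_gt
        new_prop = set(prop_ids) - seen_prop
        if month_index > 0:  # footprints in the first month never count as new
            # A match counts only if both footprints are making their first appearance.
            hits = sum(1 for g, p in matches if g in new_gt and p in new_prop)
            tp += hits
            fp += len(new_prop) - hits
            fn += len(new_gt) - hits
        seen_gt.update(gt_ids)
        seen_prop.update(prop_ids)
    return tp, fp, fn


def change_detection_f1(months):
    tp, fp, fn = change_detection_counts(months)
    denom = tp + 0.5 * (fp + fn)
    return tp / denom if denom > 0 else 0.0


# Hypothetical months echoing the Figure 2 description: building 2 arrives in
# Feb with new proposal 12; proposal 13 first shows up (unmatched) in Feb, so
# when it matches newly arrived building 4 in Mar it is no longer new.
months = [
    ({1, 3}, {10, 11}, [(1, 10), (3, 11)]),
    ({1, 2, 3}, {10, 11, 12, 13}, [(1, 10), (2, 12), (3, 11)]),
    ({1, 2, 3, 4}, {10, 11, 12, 13}, [(1, 10), (2, 12), (3, 11), (4, 13)]),
]
print(change_detection_counts(months))  # (1, 1, 1): building 4 is a false negative
print(change_detection_f1(months))      # 0.5

# Static proposals never produce a new footprint after the first month.
static = [({1}, {10}, [(1, 10)]), ({1, 2}, {10}, [(1, 10)])]
print(change_detection_f1(static))      # 0.0
```

The static case illustrates the property noted above: proposals that never change produce no new footprints after the first month, so every genuinely new building becomes a false negative and the term drops to 0.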

1.4 Putting It All Together

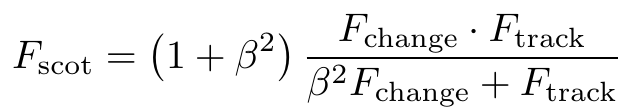

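To combine the tracking term with the change detection term, a weighted harmonic mean of these two F1 scores is used:

$$\text{SCOT} = \frac{(1+\beta^2)\, F_{\text{change}}\, F_{\text{track}}}{\beta^2 F_{\text{change}} + F_{\text{track}}}$$

where $F_{\text{track}}$ and $F_{\text{change}}$ are the tracking and change detection F1 scores.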

This makes it possible to tune the relative weight of tracking and change detection by setting a value for β. For SpaceNet 7 we use a value of β=2, which emphasizes the tracking term.
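Assuming SCOT takes the standard F-beta-style weighted harmonic mean form, with tracking in the more heavily weighted position, writing it in reciprocal form makes the effect of β explicit: the reciprocal of SCOT is a weighted average of the reciprocals of the two F1 scores.

$$\frac{1}{\text{SCOT}} = \frac{\beta^2}{1+\beta^2}\cdot\frac{1}{F_{\text{track}}} + \frac{1}{1+\beta^2}\cdot\frac{1}{F_{\text{change}}} \;\overset{\beta=2}{=}\; \frac{4}{5}\cdot\frac{1}{F_{\text{track}}} + \frac{1}{5}\cdot\frac{1}{F_{\text{change}}}$$

Under this form, with β = 2 the tracking term carries four times the weight of the change detection term.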

SpaceNet 7 covers multiple locations (a.k.a. areas of interest, or AOIs), so the reported aggregate score is the simple mean of the SCOT scores of the individual locations.
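A minimal sketch of the final combination and aggregation, assuming the F-beta-style weighted harmonic mean with tracking weighted by β². The function names `scot` and `aggregate_scot`, the AOI keys, and the 0/0 convention are hypothetical.

```python
from statistics import mean


def scot(track_f1: float, change_f1: float, beta: float = 2.0) -> float:
    """Weighted harmonic mean of the two F1 scores; beta > 1 favors tracking."""
    b2 = beta ** 2
    denom = b2 * change_f1 + track_f1
    # Returning 0.0 when both terms are 0 is an assumed convention for 0/0.
    return (1 + b2) * change_f1 * track_f1 / denom if denom > 0 else 0.0


def aggregate_scot(per_aoi: dict[str, tuple[float, float]], beta: float = 2.0) -> float:
    """Simple (unweighted) mean of each AOI's SCOT score.

    per_aoi maps a hypothetical AOI name to its (tracking F1, change F1) pair.
    """
    return mean(scot(track, change, beta) for track, change in per_aoi.values())


print(scot(0.8, 0.2))  # 0.5
print(scot(0.2, 0.8))  # about 0.235: the same scores swapped rank much lower
print(aggregate_scot({"aoi_a": (0.8, 0.2), "aoi_b": (1.0, 1.0)}))  # 0.75
```

Swapping the two inputs shows the emphasis on tracking: strong tracking with weak change detection scores about twice as high as the reverse.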

2. Conclusions

The uniqueness of the SpaceNet 7 dataset (namely, dynamic objects and geolocated addresses) demands careful consideration of an appropriate metric for the Multi-Temporal Urban Development Challenge. Existing metrics (e.g., MOTA) are not adequate for our proposed task of tracking uniquely identified building footprints through a dynamic time series. Accordingly, we created the SpaceNet Change and Object Tracking (SCOT) metric, which combines an object tracking term and a change detection term into a single score. One benefit of this metric is its continuity with past SpaceNet challenges: the SCOT tracking term reduces to the original SpaceNet Metric when evaluated at a single time step. Another benefit is that it explicitly quantifies both change detection and object tracking performance. An upcoming post will provide further details on the implementation of SCOT, including the complete code, by walking through the scoring of the SpaceNet 7 baseline algorithm.