
This image was AI-generated using ChatGPT. Image of AI building malicious code that threatens security. July 28, 2025.

What if everyone could code? Imagine your next-door neighbor, who has no coding experience, asking an AI chatbot in plain language to build a functional website or app for his small business. This positive use case shows how AI can be a great enabler, but it can also introduce new risks. The practice of using natural language and AI tools to generate code, as your inexperienced neighbor does, is often referred to as “vibe coding.” While this allows individuals to generate code without any formal training, it also opens the door to a myriad of vulnerabilities, both within the generated code and in the broader ecosystem in which that code is executed.

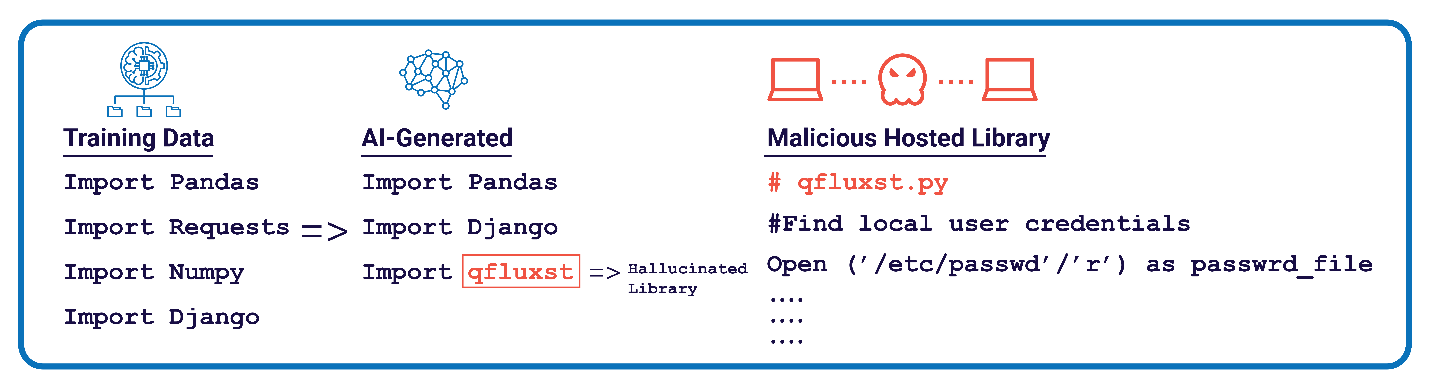

In the scenario we’re describing, one area of particular concern is the exploitation of a core tenet of code development. At the beginning of almost every computer program is an “import” or “include” statement that pulls in third-party code snippets and libraries of functions that perform common tasks, such as parsing HTML from a given URL. Rather than writing these functions themselves, developers import them from a public repository that contains code to be used and reused for common tasks. AI code generation models are trained on code examples that invoke these public repositories, so they automatically build in these import statements when they are prompted to generate code.
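As a minimal illustration of the pattern described above, the Python sketch below imports an HTML parser instead of writing one. It uses `html.parser` from Python's standard library so that it runs without installing anything; generated code often pulls in third-party packages from a public index for the same job. The `LinkCollector` class and the `extract_links` function are names chosen for this example and do not come from any particular tool.

```python
# The import statement: reuse an existing HTML parser rather than writing one.
# HTMLParser ships with Python itself, so nothing is downloaded here.
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag being opened.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value is not None:
                    self.links.append(value)


def extract_links(html_text):
    """Return all link targets found in a string of HTML."""
    parser = LinkCollector()
    parser.feed(html_text)
    return parser.links
```

The point of the example is the first line of code: the program trusts whatever the import resolves to, which is harmless for a standard-library module and much less certain for a package fetched by name from a public repository.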

But what if the AI models hallucinate the public library or repository names while generating the code? These models are trying to mimic their training set by including an import statement, but rather than linking to a legitimate library, they link to a non-existent one, much as AI models misspell words in generated art or pictures. Malicious actors could then publish their own malicious libraries under the same name as the hallucinated library and have their code automatically imported into these AI-generated code samples (see Figure 1).
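One way a team might catch a hallucinated import before it is ever installed is to list every module a generated snippet imports and compare that list against packages the team has already vetted. The sketch below does this with Python's `ast` module, which parses the code without running it. The helper names, the allowlist, and the package name `htmlfetchkit` are all invented for illustration; `htmlfetchkit` stands in for a hallucinated library.

```python
import ast


def imported_top_level_modules(source):
    """Return the set of top-level module names imported by `source`.

    The code is only parsed, never executed.
    """
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # "import a.b.c" -> top-level name "a"
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # level > 0 marks a relative import ("from . import x"),
            # which refers to local code rather than a public package.
            if node.level == 0 and node.module:
                names.add(node.module.split(".")[0])
    return names


def unvetted_imports(source, vetted):
    """Return, in sorted order, imported names that are not on the allowlist."""
    return sorted(imported_top_level_modules(source) - set(vetted))


# Hypothetical AI-generated snippet; "htmlfetchkit" is a made-up package name.
GENERATED_SNIPPET = """
import json
import requests
from htmlfetchkit import fetch
"""

# Hypothetical allowlist of modules a security team has reviewed.
VETTED = {"json", "requests"}

flagged = unvetted_imports(GENERATED_SNIPPET, VETTED)
```

A check of this kind only flags names that nobody has reviewed. It does not prove that a vetted package is safe, and import names do not always match the names used by installers (for example, `bs4` is installed as `beautifulsoup4`), so a real pipeline would need a mapping between the two.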

This is just one example of how “vibe coding” might increase the number of vulnerabilities incorporated in AI-generated code. These vulnerabilities add a new attack vector to an already porous security posture in the software supply chain. Software security teams trying to tackle package confusion and typo-squatting attacks now must be even more vigilant for vulnerabilities caused by AI code generation because of the risk of malicious code being inserted into otherwise trusted programs.

To be clear, there are certainly many benefits to AI code generation. The technology democratizes coding by allowing less technical users to develop code and projects without explicit training. It also speeds up the software development cycle. However, vibe coding may present costly downstream effects when deployed at scale. If used across an enterprise without checks, vibe coding can accelerate technical debt (the cost of future rework) by producing code that is difficult to debug and troubleshoot, creating performance and quality assurance issues over time in addition to the potential security issues described above. If integrating this technology seems daunting, even with security solutions around it, organizations could consider testing it first with senior technical employees who build experimental products that are not mission critical.

AI and the advancements it enables are not only here to stay but also represent a critical opportunity to build a strategic advantage for the U.S. IQT is therefore working to address these security gaps and de-risk the onboarding of these technologies within technology stacks, so that users can realize the benefits of scale with minimal risk. AI code generation tools can address these concerns by creating specific guardrails or requiring a human quality control review before the code is deployed. Third-party companies like Chainguard, Socket, Hunted Labs, and others can also help address these problems by limiting the scope of imported code, vetting code during compilation, and controlling the execution of code. Tools like Antithesis promise to de-risk code development by implementing robust automated testing, and AI test and evaluation companies like Dreadnode, Robust Intelligence, and Protect AI aim to further de-risk AI model invocations within an IT boundary.
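As one concrete example of vetting imported code (a general technique, not a description of how any company named above works), a build step can refuse a downloaded package whose SHA-256 digest differs from a value pinned when the package was reviewed. The sketch below assumes the package bytes are already in memory; the function names and the sample bytes are hypothetical.

```python
import hashlib


def sha256_hex(data):
    """Return the SHA-256 digest of `data` as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_pinned_digest(data, pinned_hex):
    """True only if `data` hashes to the digest recorded at review time."""
    return sha256_hex(data) == pinned_hex.strip().lower()


# Hypothetical stand-in for the bytes of a reviewed package archive.
reviewed_bytes = b"contents of the package that was reviewed"
pinned = sha256_hex(reviewed_bytes)  # recorded once, at review time

# Later, at build time: the same bytes pass, anything else is rejected.
same_ok = matches_pinned_digest(reviewed_bytes, pinned)
tampered_ok = matches_pinned_digest(reviewed_bytes + b" plus extra code", pinned)
```

Pinning ties a build to the exact artifact that was reviewed, so a different package published later under the same name would fail the check. Python's pip offers a hash-checking mode (`--require-hashes`) built on the same idea.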

Organizations may view AI code generation as a magic bullet for the staffing challenges and scaling limitations inherent in modern software development, but security teams need to be aware of these threats and potential mitigations as novel development tools emerge. IQT will continue to invest in this space to improve the security and efficacy of these tools, which can ultimately enable a critical and game-changing strategic advantage if implemented with security at the forefront.