Introduction

The future of work is creating a new golden age of upskilling, where anyone, anywhere, at any time can improve on anything. The internet has reduced the physical constraints of time and space, enabling anyone with a stable internet connection to take online classes asynchronously and from the comfort of their homes. Artificial Intelligence can accurately assess one’s skills and deficiencies and recommend materials for self-improvement. The massive migration to the internet wrought by COVID-19 has also accelerated the demand for online upskilling tools.

The flip side to this is that today’s advanced technology may not only democratize upskilling, but also require it. In 2017, the McKinsey Global Institute estimated that as many as 375 million workers would need to upskill by 2030 due to automation. Indeed, demand for manual skills in repeatable tasks is expected to decline by 30%, but the demand for technological skills is expected to rise by more than 50%, and the demand for complex cognitive, social, and emotional skills by about 30%. Furthermore, 87% of executives said they were experiencing skill gaps, and almost one-third thought their current HR infrastructure would not be able to execute new strategies to address these gaps. This dearth of training is alarming, as it is pushing employees to leave their employers. For example, in a recent global survey of office workers by UiPath, 88% said they’d be more willing to continue working at a company if it offered upskilling opportunities. As a result, we’ve seen companies like Amazon promise to upskill substantial portions of their workforces.

In short, the digital age provides unparalleled opportunities for individuals to fulfill their desire for self-improvement, but advanced technologies will require individuals and enterprises alike to capitalize on those opportunities if they want to succeed in the future of work.

The upskilling value chain

Upskilling is the process by which an individual acquires new skills. Workers upskill through either formal education or work experience. To keep up with rapid technological change, people need to upskill continuously.

A value chain is the series of steps that add value to a particular product or service. In the context of upskilling, the value chain primarily consists of three parts, which correspond temporally to a typical “user journey”:

- Assess: At the outset, an individual needs to determine what skills she has, what her deficiencies are, and what her plan should be in upskilling. Similarly, an employer needs to assess the competency of the employee to decide how best to upskill the employee.

- Learn: Once an individual has determined her plan for upskilling, she actually needs to learn and train in that skill. Companies often bundle learning with assessment.

- Credential: After an individual has mastered a skill, she needs to signal to employers, co-workers, or customers that she now has that skill.

In the analog world, the credentialing step extracted the lion’s share of value from the value chain. Indeed, because assessments (e.g., via interviews, tests, etc.) provided only a low-fidelity, grainy picture into an individual’s true skills, employers relied on credentials as a market signal, a proxy for an individual’s capacity to learn and succeed on the job. For example, many employers have historically relied on degrees as a quick-look, blunt proxy of one’s skills, and school rankings as the same for one’s proficiency in those skills. Over time, thanks to the strength of the credential, these schools accumulated institutional prestige. They became gatekeepers, forcing students to chase the credential and employers to make decisions based on the credential. It was a great place in the value chain to be!

Today, technology is shifting the plane of competition and upending the value chain by placing a far higher emphasis on assessments. Advances in AI greatly increase the efficacy of skills assessment tests, producing a more granular, accurate picture of an individual’s strengths and weaknesses. As a result, individuals are more able to chart a path forward to upskill themselves, and employers are more able to deploy their workforce towards jobs that best utilize their employees. People no longer need to depend so heavily on credentialing mechanisms to understand others’ skills and proficiencies. (To be fair, however, under disruption theory, the incumbent credentialing providers will continue to exist, at least in the short term. Their prestige has ossified over the years. They may be able to protect their position by moving “upstream” and attempting to accumulate even more prestige to appeal to other prestige-seekers.)

The internet has also changed the learning portion of the value chain by democratizing access. Whereas local schools and learning providers once enjoyed geographic lock-in, the internet now enables students to access a cornucopia of content online, blowing geography wide open. However, another problem has emerged: How can individuals navigate this new world of online content to find the material most relevant to their specific upskilling needs? Again, this problem brings us back to the importance of assessments. Just as YouTube organized a vast catalog of online videos to deliver the content most relevant to the user’s search and desires, assessment tests hold the potential to recommend content specifically tailored to the user’s deficiencies.

AI and the internet are disruptive technologies that will greatly increase the importance of assessments in the upskilling value chain. But within the realm of assessments, how do we characterize the startups in the space? Below, we provide a framework for thinking about the future of skills assessment companies.

A framework for assessments

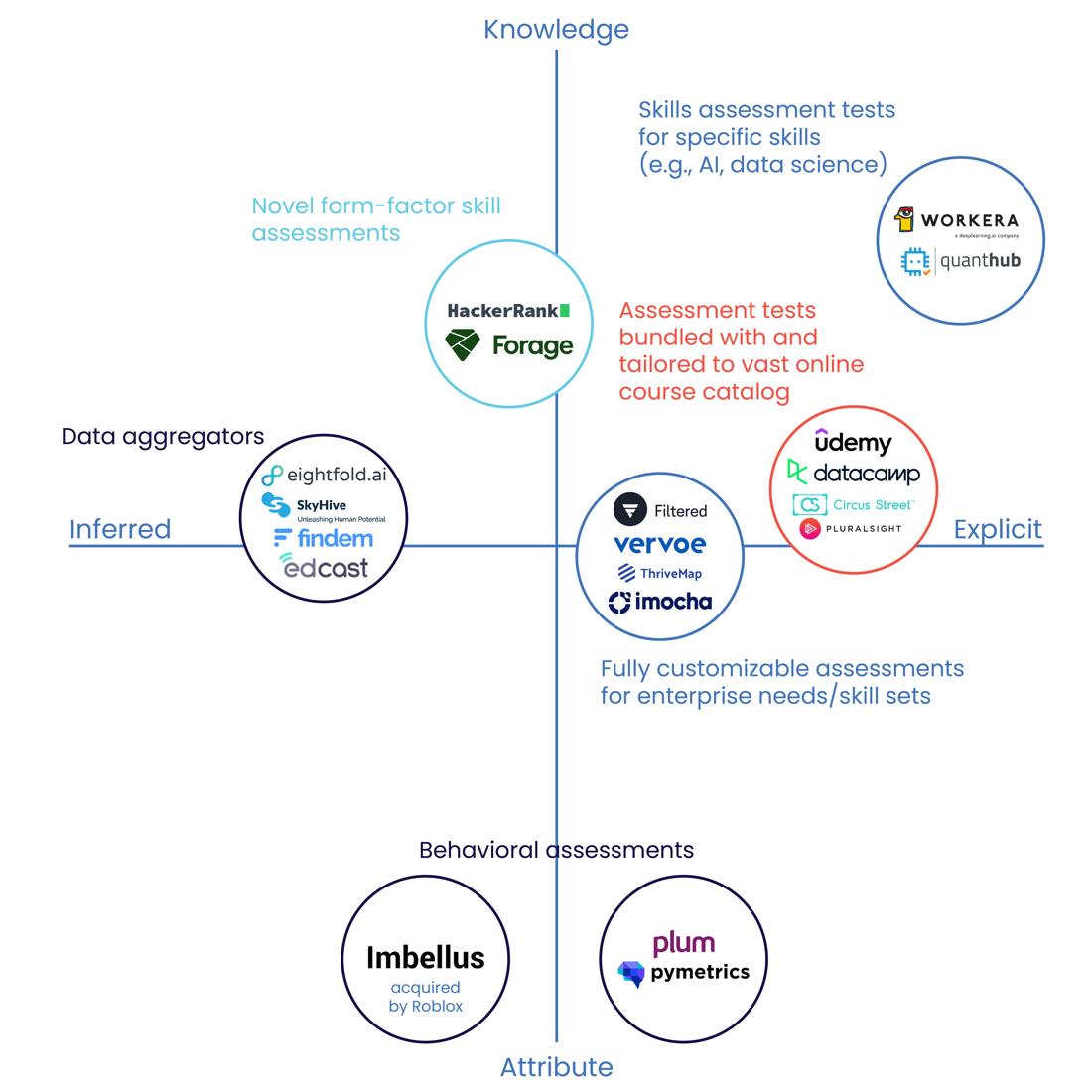

Within upskilling assessments, we believe startups are divided along two axes:

- Services that assess users on their knowledge vs. their attributes, and

- Explicit vs. inferred assessments.

Importantly, these axes are fluid (and admittedly sometimes fuzzy) sliding scales rather than discrete buckets.

First, we define knowledge as an individual’s learned, more fluid skills while we define attributes as an individual’s innate behavioral traits and attitudes. The Myers-Briggs Type Indicator, for instance, is an attribute assessment that tests, among other things, whether an individual is naturally introverted or extroverted. Introversion and extroversion are attributes because they are organic predispositions that are intrinsic to a particular individual. While an introvert can build a muscle of extroversion, that individual at the end of the day is still an introvert through and through. Her introversion is a rubber band that can be stretched to a certain extent but returns to an equilibrium state of comfort when left alone. On the other hand, a skill such as mathematics is more akin to a mound of knowledge that grows over time and has no theoretical upper bound in size. While knowledge can also admittedly shrink, this shrinkage is less a function of an innate, reflexive behavioral trait than it is of prolonged individual neglect.

Second, we differentiate between explicit and inferred (implicit) signals according to two sub-factors: (1) the methodology and (2) the intent of the evaluation. To begin, methodology is the form that the assessment takes. Explicit methodologies assess an individual’s competency in a skill via a set of questions targeted and tailored to that particular skill (e.g., multiple choice). These types of questions provide direct signals of an individual’s competencies. Implicit methodologies, by contrast, infer skills from user behavior in less direct formats such as games or competitions.

With respect to intent, an assessment with explicit intent is clearly administered as a test to determine one’s strengths and weaknesses. When taking these assessments, users know full well that their performance will be measured for a specific upskilling purpose such as finding a job. An assessment with explicit intent is a discrete event: it is easy to tell where the assessment begins and ends. On the other hand, assessments with implicit intent build a mosaic of an individual’s skills based on a hodgepodge of past and current user behavior. These assessments cobble signals together from, for instance, an individual’s previous work history, personal website, or open-source contributions. Here, the person being assessed typically doesn’t know whether or which of their past behaviors are being pulled in to derive insights on their strengths and weaknesses. Importantly, some assessment services will straddle the line between explicit and implicit. For example, game-based assessments use an implicit methodology but are explicitly intended to assess the skills of the individual playing the game.
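
To make the two sub-factors concrete, here is a minimal sketch in Python. It is illustrative only: the names `Signal`, `Assessment`, and `straddles` are our own hypothetical labels, not any company's product or API, and the three example entries simply encode the classifications described above.

```python
from dataclasses import dataclass
from enum import Enum


class Signal(Enum):
    EXPLICIT = "explicit"
    IMPLICIT = "implicit"


@dataclass(frozen=True)
class Assessment:
    """Hypothetical record placing an assessment on the explicit/implicit axis."""
    name: str
    methodology: Signal  # the form the assessment takes
    intent: Signal       # whether it is clearly administered as a test

    def straddles(self) -> bool:
        # True when methodology and intent fall on different sides of the axis.
        return self.methodology is not self.intent


# Illustrative entries taken from the examples in the text above.
EXAMPLES = [
    Assessment("multiple-choice skills test", Signal.EXPLICIT, Signal.EXPLICIT),
    Assessment("game-based assessment", Signal.IMPLICIT, Signal.EXPLICIT),
    Assessment("work-history aggregation", Signal.IMPLICIT, Signal.IMPLICIT),
]

for a in EXAMPLES:
    label = "straddles the line" if a.straddles() else f"fully {a.methodology.value}"
    print(f"{a.name}: {label}")
```

Keeping methodology and intent as separate fields, rather than a single explicit/implicit flag, is what lets the game-based case be represented at all; a real taxonomy would likely treat each as a sliding scale rather than a two-valued label.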

Below, we map the startups in the upskilling assessments space according to this framework and provide our own thoughts on what types of assessments excite us:

Explicit skills assessments for knowledge. These companies use proprietary, adaptive testing algorithms to assess an individual’s strengths and weaknesses in a particular knowledge skill. Based on an individual’s results, the company will then formulate a tailored learning plan for the individual. For example, Workera has developed an AI and machine learning skills assessment that then recommends specific learning modules on external platforms such as Coursera and Udacity.

Behavioral assessments. Companies like Plum and Pymetrics use proprietary psychometric tests to evaluate behavioral attributes. Similarly, Imbellus (acquired in late 2020 by Roblox) uses games to infer behavioral skills. Importantly, in the short run, we predict that explicit assessments for hard, technical skills will have higher adoption than will explicit assessments for “softer” skills (e.g., risk appetite, generosity) because the former are more measurable (and therefore more empirical) in their efficacy.

Assessments bundled with and tailored to online course catalogs. At their core, companies like Udemy and Pluralsight are learning platforms that serve course material directly to users, but these companies also offer assessments as a side feature to help individuals choose which of the platform’s courses to take. In other words, these companies integrate learning and assessments in the upskilling value chain. One main difference between these companies and assessment-only companies is that the latter are more willing to route you to any number of external learning platforms to best serve your upskilling needs, while the former try to keep you on the platform.

Fully customizable assessments. Some upskilling companies enable businesses to create their own customizable tests based on their assessment needs, whether for knowledge or behavioral skills. While these companies provide businesses with a high degree of flexibility, their biggest challenge will be proving efficacy. While certain customizable assessments may find success for businesses with a certain bundle of upskilling needs, does this success transfer broadly to businesses with other needs?

Data aggregators. These companies pull and piece together data from disparate sources to assess an individual’s skills. Notably, some platforms are more focused on knowledge than behavior (e.g., Eightfold focuses on AI skills in particular). Unlike online course platforms, data aggregator platforms assess skills through implicit signals. For example, EdCast’s SkillsDNA engine builds skills profiles from employee self-assessments, metadata extraction from resumes and references, and time spent watching learning materials. We believe that these implicit assessments alone will provide a lower-fidelity picture of strengths and weaknesses than will explicit assessments alone. However, the two types of assessments are complementary, and we believe that a combination of both will best serve assessment needs.

Novel form-factor assessments. Finally, some companies provide assessments in novel form factors. HackerRank, for example, specializes vertically in coding assessments. While some of these assessments are explicit multiple-choice questions, others are worldwide coding competitions, which we characterize as more of an implicit signal of strengths and weaknesses. Forage creates custom work programs to enable businesses to evaluate an individual’s skills. To reach market adoption, these companies will need to prove the efficacy of their assessment methodologies.

Conclusion

While startups are taking vastly divergent approaches to acquire market share in the assessments space, our framework has important predictive power and can help users, founders, enterprises, and venture capitalists alike understand the burgeoning entrepreneurial landscape. First, a company’s position on the knowledge vs. attributes axis must align with its sales strategy and customer profile. For instance, we should expect startups offering knowledge assessments to sell to enterprises requiring engineering skills. On the other hand, we should expect startups offering attribute assessments to sell primarily to management consulting firms or other industries that more heavily prioritize interpersonal relationships. As we hinted in the introduction, demand for both types of skills will increase in the years to come, but different industries will prioritize different skillsets. Second, the inferred vs. explicit axis can be a helpful proxy for thinking about the accuracy of the assessment. While all assessment companies will need to prove the efficacy of their assessments, some industries may be more trusting of (or suited to) one type of assessment over another. We believe that explicit assessments for knowledge skills will bring the most value because these assessments capture more direct signals of an individual’s competency in an empirical, measurable skill. That said, these assessments will need to be combined with other assessments to paint a holistic picture of an individual’s upskilling needs.

What will the future hold for assessments? As technology continues to evolve, assessments will surely transform along with it. For instance, if behavioral data across an individual’s lifetime is continually aggregated and analyzed into a sort of learning passport, perhaps we’ll see one-time assessments disappear as a thing of the past. Alternatively, brain-computer interfaces or neural implants may be able to assess skills down to a pinpoint level of accuracy without any need for a real-world assessment. However, regardless of what form assessments take, the need for assessments will remain as we continually reinvent and improve ourselves for the future of work.