Introducing the Solaris Multimodal Preprocessing Library

The latest release of Solaris, available now, includes a new resource for geospatial deep learning: the Solaris Multimodal Preprocessing Library. The library eases the work of imagery preprocessing, i.e., the data cleaning and image analysis needed to get data into its final form for deep learning. It does so by providing building blocks for constructing powerful image-processing workflows.

Sometimes building a dataset is as simple as tiling ready-to-go images and their accompanying vector labels, a task for which Solaris’s tile subpackage is well suited. Often, though, the imagery itself must be modified in more substantial ways. This is especially true for multimodal datasets, where tasks like applying masks from one modality to another are the norm. It is also true whenever one wants to process imagery with techniques such as pansharpening or noise reduction. The analysis of synthetic aperture radar (SAR) data, in particular, is replete with image-processing algorithms needed to convert complex SAR data into more readily interpretable imagery.

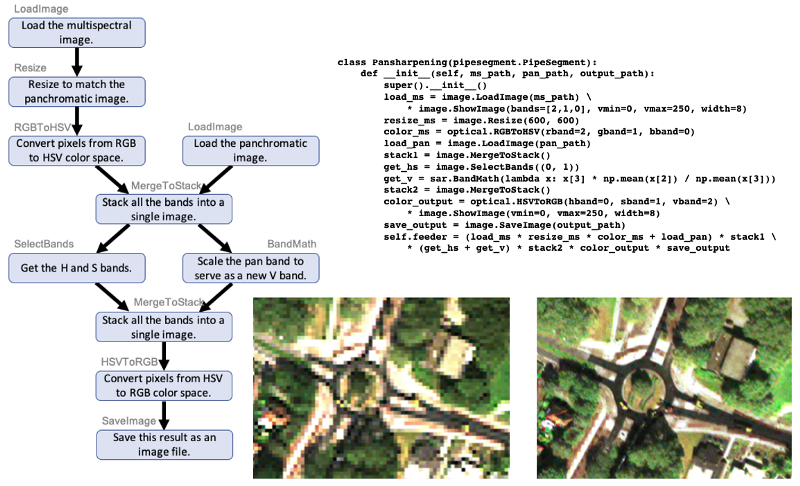

To support workflows like those, Solaris’s new preproc subpackage contains a library of more than 60 Python classes, each of which does a distinct data manipulation task. Although these classes may be useful individually, their real value is that they can easily be combined into user-defined classes to execute image-processing workflows of arbitrary complexity.

Suppose that a user has in mind a flowchart describing some preprocessing workflow. To turn that idea into a class, the first step is to instantiate an object for each task in the flowchart. Many typical tasks (e.g., load an image, apply a mask, do a calculation with the image’s bands) are available among the 60+ options already in the library. The second step is to specify how the inputs and outputs of all those tasks are connected to each other. This can be done in a single line of code using a concise notation. (Compared to similar programs that require the user to draw a flowchart in a GUI or re-type the task names every time their output is used, this approach is much less tedious.)
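To make the two steps concrete, here is a minimal, self-contained sketch of the idea. It does not use Solaris: the `Task` class, the choice of `*` as the "feed into" operator, and the toy tasks are all assumptions made for illustration, and the library's actual class names and notation should be taken from its documentation.

```python
class Task:
    """Hypothetical stand-in for one box in a preprocessing flowchart."""

    def __init__(self, func, *inputs):
        self.func = func            # the work this task performs
        self.inputs = list(inputs)  # upstream tasks whose outputs it consumes

    def __mul__(self, other):
        # "a * b" reads as "feed the output of a into b".
        # A new Task is returned so the original objects stay reusable.
        return Task(other.func, *other.inputs, self)

    def __call__(self):
        # Evaluate upstream tasks first, then apply this task's function.
        args = [task() for task in self.inputs]
        return self.func(*args)


# Step 1: instantiate one object per task in the flowchart.
load = Task(lambda: [[1, 2], [3, 4]])  # pretend "load an image"
double = Task(lambda img: [[2 * v for v in row] for row in img])
total = Task(lambda img: sum(sum(row) for row in img))

# Step 2: connect inputs to outputs in a single line.
pipeline = load * double * total

result = pipeline()
print(result)
```

Nothing runs until `pipeline()` is called; the line that builds `pipeline` only records how the tasks are wired together.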

The reason this works is that the classes in the preprocessing library all inherit from a base class, called PipeSegment, that handles much of the functionality in the background. For example, the user does not need to keep track of hard disk file paths for all the intermediate results; those results are instead stored in RAM and deleted as soon as they’re no longer needed. The user doesn’t even need to figure out the order in which to do the calculations; that’s also handled automatically. In this functional programming approach, the user’s only job is to specify exactly what data processing should be done. Figuring out the implementation details is a job for the computer.
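The two background chores mentioned here, choosing an execution order and discarding intermediate results once nothing else needs them, can be sketched in a few lines of plain Python. This is an illustration of the general technique only, not PipeSegment's implementation; the `run_graph` function and the toy task names are hypothetical, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter


def run_graph(tasks, deps):
    """Run every task once, in dependency order, freeing inputs early.

    tasks: dict mapping a task name to a function
    deps:  dict mapping a task name to the list of task names it consumes
    Returns whatever results are still held at the end (the final outputs).
    """
    # Count how many downstream tasks still need each result.
    remaining = {name: 0 for name in tasks}
    for inputs in deps.values():
        for name in inputs:
            remaining[name] += 1

    results = {}
    # static_order() yields each task only after all of its inputs.
    for name in TopologicalSorter(deps).static_order():
        inputs = deps.get(name, [])
        results[name] = tasks[name](*[results[i] for i in inputs])
        # Drop any input that no later task will read.
        for i in inputs:
            remaining[i] -= 1
            if remaining[i] == 0:
                del results[i]
    return results


tasks = {
    "load": lambda: 10,
    "half": lambda x: x / 2,
    "square": lambda x: x * x,
    "combine": lambda a, b: a + b,
}
# Dependencies are deliberately listed out of execution order.
deps = {
    "combine": ["half", "square"],
    "half": ["load"],
    "square": ["load"],
}

final = run_graph(tasks, deps)
print(final)
```

The caller only declares which task depends on which. By the time `run_graph` returns, the intermediate values for `load`, `half`, and `square` have already been released, and only the result of `combine` is left.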

In addition to the release of the code itself, a three-part tutorial has been added to the Solaris tutorials page. Part 1 of the tutorial shows how to create a simple image-processing pipeline, using the real-world example of a normalized difference vegetation index (NDVI). Part 2 shows how to handle more complicated workflows in which the data flow branches apart and recombines, using the example of a pansharpening algorithm. Finally, Part 3 shows how to use the preprocessing library to work with SAR. The flexibility of the Solaris Multimodal Preprocessing Library makes it an effective tool for use cases like these and many others.
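For readers unfamiliar with the Part 1 example, NDVI is the band calculation (NIR − Red) / (NIR + Red). The sketch below computes it directly with NumPy rather than with the Solaris classes the tutorial uses; the `ndvi` function name, the sample pixel values, and the choice to return 0 where both bands are 0 are assumptions made for this illustration.

```python
import numpy as np


def ndvi(red, nir):
    """Normalized difference vegetation index, computed per pixel."""
    red = np.asarray(red, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    total = nir + red
    out = np.zeros_like(total)
    # Pixels where nir + red == 0 keep the value 0 instead of dividing by zero.
    np.divide(nir - red, total, out=out, where=total != 0)
    return out


# Tiny made-up 2x2 bands, for illustration only.
red = np.array([[50, 100], [0, 30]])
nir = np.array([[150, 100], [0, 90]])

index = ndvi(red, nir)
print(index)
```

In a pipeline built from the preprocessing library, the same arithmetic would sit between a load step and a save step instead of operating on hand-built arrays.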