1. Executive Summary

With increased availability of overhead imagery, machine learning is becoming a critical tool for analyzing this imagery. Advanced computer vision object detection techniques have demonstrated great success in identifying objects of interest such as ships, automobiles, and aircraft from satellite and drone imagery. Yet relying on computer vision opens significant vulnerabilities, namely, the susceptibility of object detection algorithms to adversarial attacks.

In this post we explore preliminary work on the efficacy and drawbacks of adversarial camouflage in the overhead imagery context. In brief, while adversarial patches may fool object detectors, the presence of such patches is easily uncovered, which raises the question of whether such patches truly constitute camouflage.

2. Existing Attacks

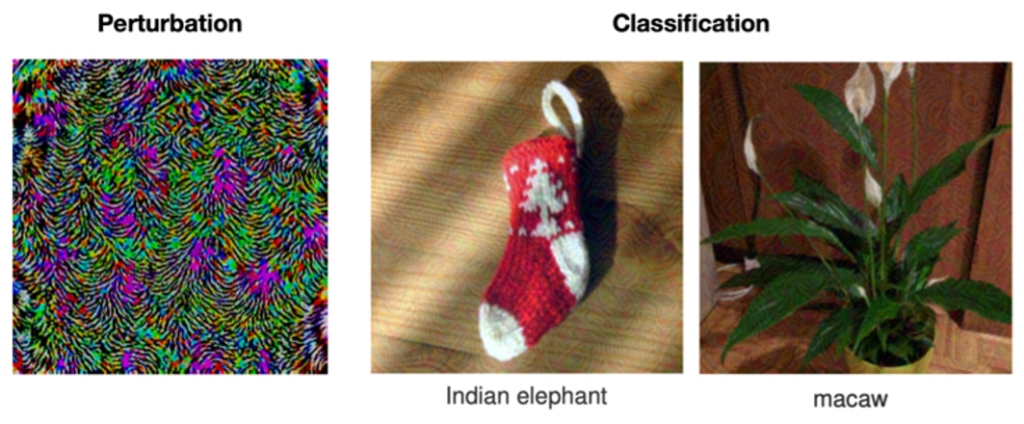

Computer vision algorithms are known to be susceptible to small input perturbations. A 2017 study summarized its findings as follows: “Given a state-of-the-art deep neural network classifier, we show the existence of a universal (image-agnostic) and very small perturbation vector that causes natural images to be misclassified with high probability” [1]. Most research has focused on simple image classification, as in Figure 1.

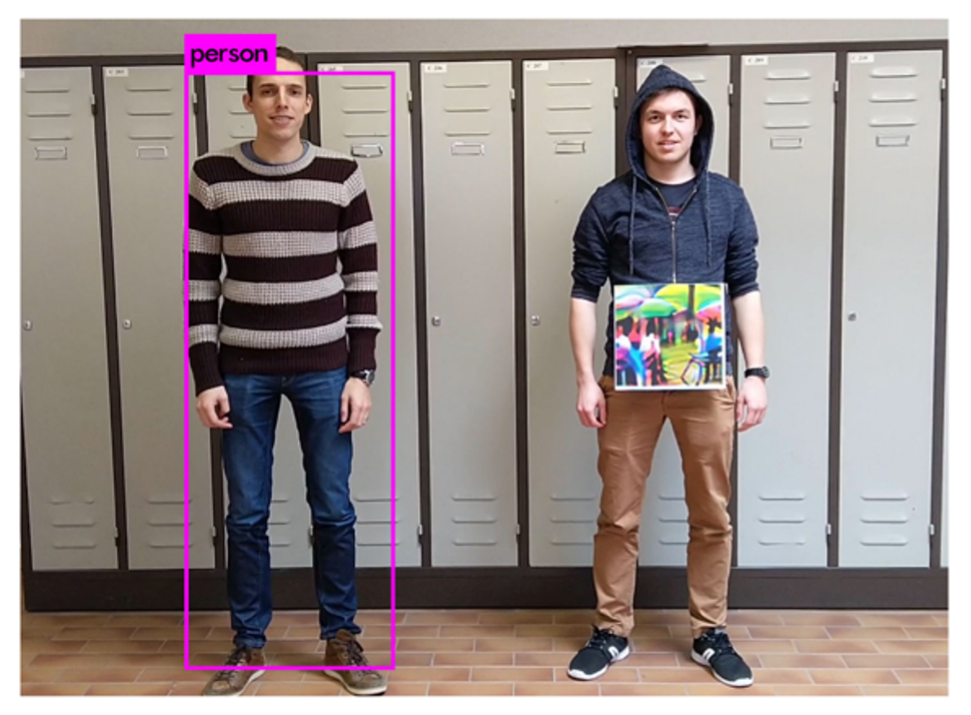

There is also a growing body of work on fooling advanced object detection systems (e.g., YOLO, SSD, Faster R-CNN). Notably, impressive results have been achieved in evading person detectors in the real world [2], [3], as shown in Figure 2.

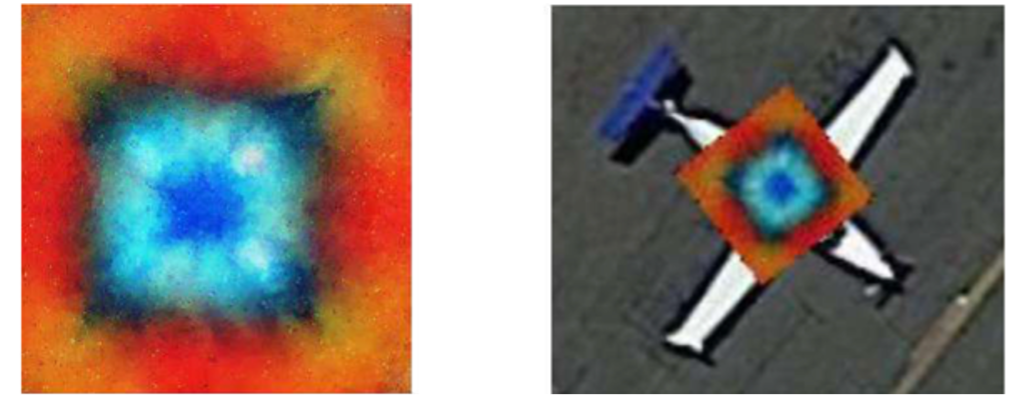

Of greatest relevance to this work is a recent paper entitled “Adversarial Patch Camouflage against Aerial Detection” [4], which demonstrated the ability to fool aircraft detectors with simulated patches (see Figure 3). While these simulated patches may be quite effective in fooling aircraft detectors, the authors did not explore the detectability of the patches themselves.

3. Patch Counterintelligence

There is much to explore in this adversarial camouflage space, but we will start with a simple counterintelligence example. In Section 2 we discussed prior work on effectively obfuscating objects with carefully designed patches. We ask a simple question: can the presence of adversarial patches be detected?

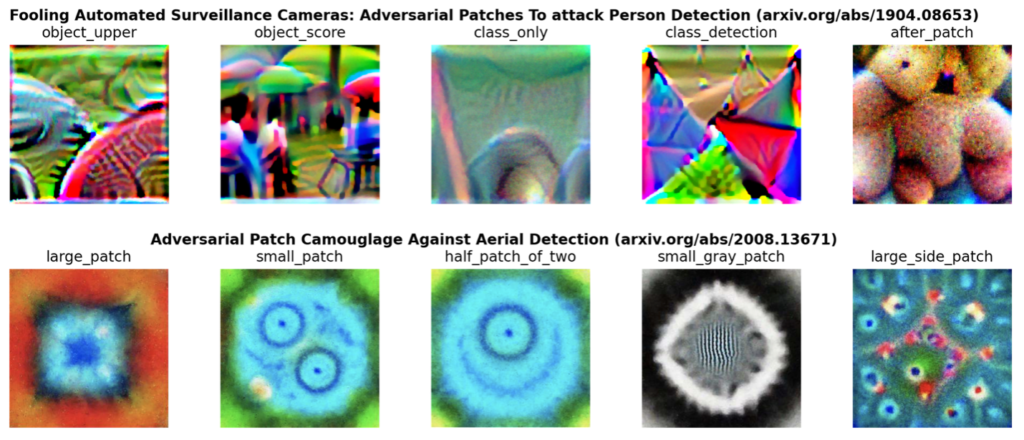

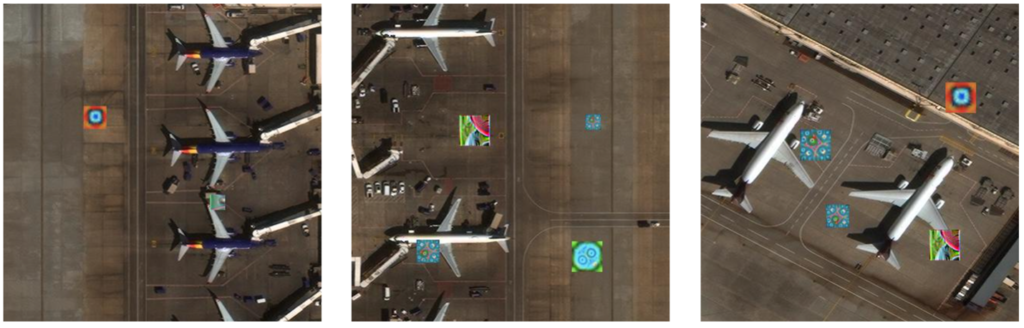

To answer this question, we use the RarePlanes dataset and overlay a selection of existing adversarial patches on satellite image tiles. See Figure 4 for the patches used.

We overlay the 10 patches of Figure 4 on 1667 image patches from the RarePlanes dataset, with 20% of these images reserved for validation. The size and placement of each patch are randomized to increase the robustness of the detector. See Figure 5 for a sample of the training imagery.
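The overlay step can be illustrated with a minimal sketch. This is not the code used for the experiments: the function names (`overlay_patch`, `train_val_split`), the size range (`min_frac`, `max_frac`), the square patch shape, and the nearest-neighbor resize are all assumptions chosen to keep the example dependency-light. It only shows the general idea of pasting a patch at a random scale and location, recording its bounding box as a training label, and holding out 20% of the images for validation.

```python
import numpy as np

def overlay_patch(image, patch, rng, min_frac=0.1, max_frac=0.3):
    """Paste a randomly resized square patch at a random location.

    Returns the composited image and the patch bounding box
    (x_min, y_min, x_max, y_max), which can serve as a detection label.
    The size range is a hypothetical choice, not taken from the post.
    """
    h, w = image.shape[:2]
    # Random side length as a fraction of the smaller image dimension.
    side = max(int(rng.uniform(min_frac, max_frac) * min(h, w)), 1)
    # Nearest-neighbor resize via index sampling.
    rows = np.arange(side) * patch.shape[0] // side
    cols = np.arange(side) * patch.shape[1] // side
    resized = patch[rows][:, cols]
    # Random top-left corner such that the patch stays inside the image.
    y0 = int(rng.integers(0, h - side + 1))
    x0 = int(rng.integers(0, w - side + 1))
    out = image.copy()
    out[y0:y0 + side, x0:x0 + side] = resized
    return out, (x0, y0, x0 + side, y0 + side)

def train_val_split(n_items, val_frac, rng):
    """Shuffle indices and reserve a fraction of them for validation."""
    idx = rng.permutation(n_items)
    n_val = int(round(n_items * val_frac))
    return idx[n_val:], idx[:n_val]

# Synthetic stand-ins for an image tile and an adversarial patch.
rng = np.random.default_rng(0)
image = np.zeros((416, 416, 3), dtype=np.uint8)
patch = np.full((64, 64, 3), 255, dtype=np.uint8)
composited, bbox = overlay_patch(image, patch, rng)
train_idx, val_idx = train_val_split(1667, 0.2, rng)
```

Randomizing scale and position in this way keeps the detector from memorizing a fixed patch location or size, which is the robustness motivation given above.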

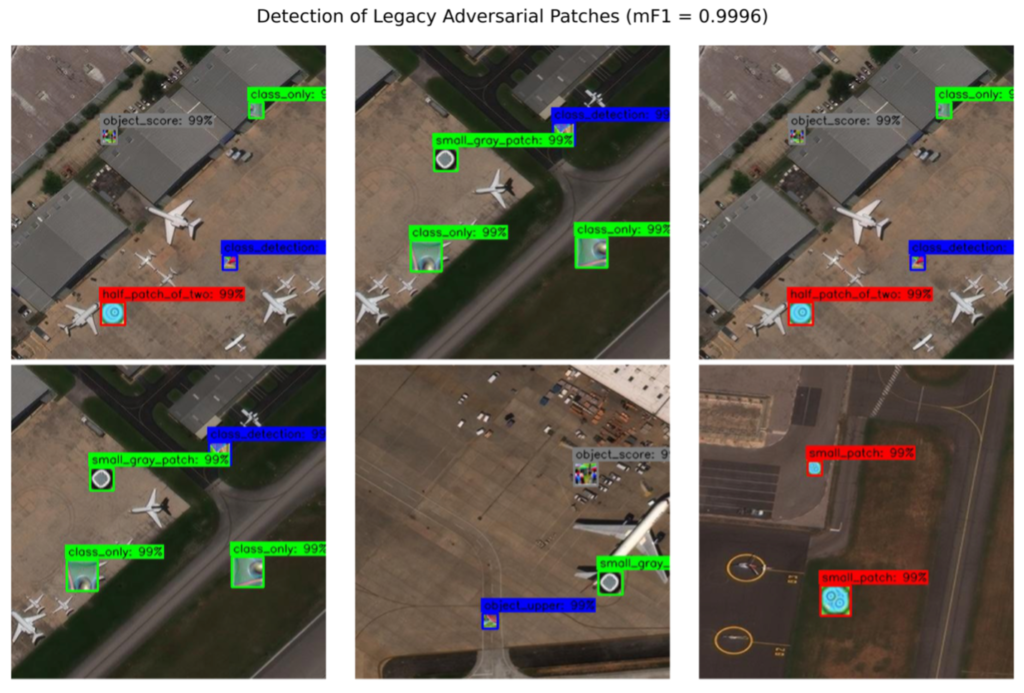

We train a 10-class YOLTv4 adversarial patch detection model (YOLTv4 is built atop YOLO and designed for overhead imagery analysis) for 20 epochs. We evaluate the trained model on a separate test set of 1029 images and score it with the F1 metric, which penalizes both false positives and false negatives. The model yields an astonishing aggregate F1 = 0.999 for detection of the adversarial patches, with all 10 patches easily identified. We show a detection example in Figure 6.
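For readers unfamiliar with the F1 metric, the sketch below shows how it combines precision and recall from counts of true positives, false positives, and false negatives. The function name `f1_score` and the example counts are hypothetical and purely illustrative; they are not the counts from our evaluation, and how detections are matched to ground truth (for instance, the overlap threshold) is left out.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts, chosen only to illustrate the arithmetic.
balanced = f1_score(tp=90, fp=10, fn=10)    # precision 0.9, recall 0.9
many_misses = f1_score(tp=50, fp=0, fn=50)  # precision 1.0, recall 0.5
print(round(balanced, 3), round(many_misses, 3))
```

The second case shows why F1 is a stricter summary than precision alone: a detector with no false alarms that misses half the patches still scores only about 0.667.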

4. Conclusions

In this post we reviewed existing academic research on fooling state-of-the-art object detection frameworks with adversarial patches. While such patches may indeed fool object detectors, we found that the presence of these patches is easy to detect. Overlaying a sample of 10 adversarial patches from recent academic papers on the RarePlanes imagery, we trained a YOLTv4 model to detect these patches with near-perfect performance (F1 = 0.999). If one can easily identify the existence of such legacy patches, then it matters little whether the patches confuse aircraft detection models. In subsequent posts, we will explore whether “stealthy” patches can be designed that are difficult to spot with advanced object detection algorithms, and how much variability is necessary to ensure stealthiness. For now, feel free to explore further details in our arXiv paper.