Hello! This post is part of a series on the AI Sonobuoy project by IQT Labs, a learn-by-doing exploration of maritime situational awareness. Please visit the first blog in the series here.

As part of our exploration into AI in the maritime domain through the AI Sonobuoy project, we arrived at several mental models that can be broadly applied to AI at the Edge. In true IQT Labs fashion, we are looking to stress test and improve these mental models, so come join the conversation and reach out to let us know what you think.

If you’d like to learn more about current or future Labs projects, or are looking for ways to collaborate, contact us at info@iqtlabs.org.

High-Level Overview

The mental models we will explore here were derived during development of the AI Sonobuoy project. By presenting them early in the series, we want to highlight the organizing hypotheses that we are applying to AI modeling at the Edge. These models were derived from the Labs’ learn-by-doing approach to emerging technology verticals and present pragmatic abstractions that can be tactically applied to EdgeTech AI.

This post also discusses how we think about the cascade effects of iterating on various parts of the AI pipeline, how to think about neural network architectures at the Edge, and the tradeoffs to be made when using open-source software as well as commercial off-the-shelf (COTS) hardware.

Mental Models

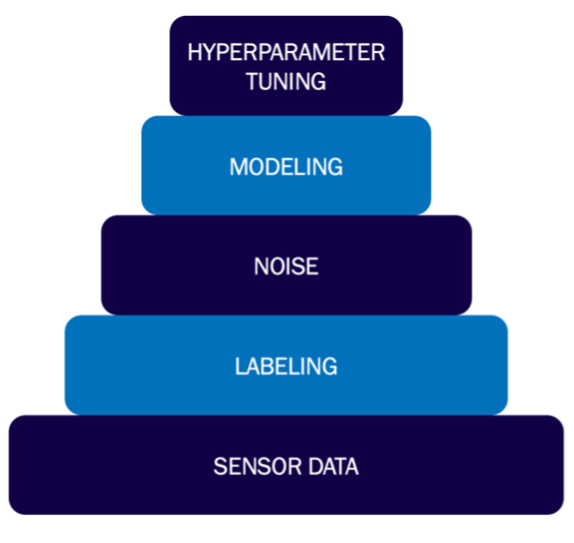

Hierarchy of AI Modeling

Similar to Abraham Maslow’s “Hierarchy of Needs,” we have developed what you might call a “Hierarchy of AI Modeling,” which is depicted in the graphic above. Like Maslow’s version relating to human needs, our AI version ascribes a higher importance to the blocks that are lower down. In other words, these blocks have a greater weight in determining the quality of AI models than those above them, which is why we have placed greater emphasis on iterating at these lower levels.

The sensor data layer deals with keeping the raw data inputs, in this case hydrophone audio and Automatic Identification System (AIS) messages, highly available so that data loss is minimal. Moreover, understanding the quality of the sensor data helps to calibrate effective range and thresholds downstream.

Given the importance of this foundational layer, investments in improving the fidelity of sensor data should have the greatest impact on the quality of modeling that can be derived from the data. It’s worth noting that improving fidelity does not necessarily mean improving the resolution of the data; the greater emphasis is on characterization. A higher resolution should simply increase the effective range.

The next layer up, Labeling, reflects a requirement of supervised machine learning: each piece of sensor data must be associated with the class it represents. The AI Sonobuoy hydrophone audio was labeled using AIS messages that were captured concurrently.
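
To make the idea of labeling audio with concurrent AIS traffic concrete, here is a minimal sketch in Python. It is not the AI Sonobuoy implementation: the `AisMessage` fields, the `ship_type` string, and the `label_clips` helper are hypothetical names chosen for illustration, and the sketch assumes the AIS messages have already been decoded and share a clock with the audio.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass


@dataclass
class AisMessage:
    timestamp: float  # seconds on the same clock as the audio (assumption)
    mmsi: int         # vessel identifier carried in AIS messages
    ship_type: str    # hypothetical, already-decoded class name


def label_clips(clip_starts, clip_len_s, ais_messages, pad_s=0.0):
    """Attach to each audio clip the ship types reported over AIS during it.

    Returns a list of (clip_start, sorted_labels). A clip with no concurrent
    AIS traffic gets an empty label list. Window edges are inclusive, so a
    message exactly on a boundary labels both neighboring clips.
    """
    msgs = sorted(ais_messages, key=lambda m: m.timestamp)
    times = [m.timestamp for m in msgs]
    labeled = []
    for start in clip_starts:
        lo = bisect_left(times, start - pad_s)
        hi = bisect_right(times, start + clip_len_s + pad_s)
        labels = sorted({m.ship_type for m in msgs[lo:hi]})
        labeled.append((start, labels))
    return labeled


if __name__ == "__main__":
    traffic = [
        AisMessage(5.0, 111, "cargo"),
        AisMessage(12.0, 222, "tug"),
        AisMessage(14.0, 111, "cargo"),
    ]
    for start, labels in label_clips([0.0, 10.0, 20.0], 10.0, traffic):
        print(start, labels)
```

The `pad_s` parameter is one way to tolerate clock skew between the two sensors. How to treat clips with no AIS traffic (a "no vessel" class, or dropping them) is a design choice this sketch leaves open.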

Then comes Noise. Feature-engineering approaches help minimize noise and maximize signal in the data representation that the model uses to distinguish different classes. These gains propagate strongly downstream, especially in Edge settings, where model architectures are heavily restricted by memory constraints.
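
As one illustration of feature engineering for audio, the sketch below summarizes a frame of samples as log energies in a few frequency bands, a compact representation in which a narrowband source stands out from the rest of the spectrum. The post does not say which features the AI Sonobuoy uses, so treat this as an assumed example; `log_band_energies` and its parameters are hypothetical, and the naive DFT is there only to keep the sketch free of dependencies (a real pipeline would use an FFT library).

```python
import cmath
import math


def log_band_energies(frame, n_bands=4, eps=1e-12):
    """Log energy in n_bands equal-width frequency bands of one audio frame.

    Only bins below the Nyquist frequency are used. If the number of bins is
    not divisible by n_bands, the highest leftover bins are ignored.
    """
    n = len(frame)
    half = n // 2
    if half < n_bands:
        raise ValueError("frame too short for the requested number of bands")
    # Naive O(n^2) discrete Fourier transform, power per frequency bin.
    power = []
    for k in range(half):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(frame))
        power.append(abs(coeff) ** 2)
    band_size = half // n_bands
    # eps keeps the logarithm finite for silent bands.
    return [math.log(sum(power[b * band_size:(b + 1) * band_size]) + eps)
            for b in range(n_bands)]


if __name__ == "__main__":
    # A pure tone at DFT bin 10 of a 64-sample frame falls in band 1 (bins 8-15).
    tone = [math.sin(2 * math.pi * 10 * t / 64) for t in range(64)]
    print(log_band_energies(tone))
```

Reducing each frame to a handful of numbers also shrinks the model's input layer, which matters when the architecture is constrained by device memory.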

Finally, model architecture and hyperparameter tuning typically have the smallest, but still significant, effects on the efficacy of the AI model.

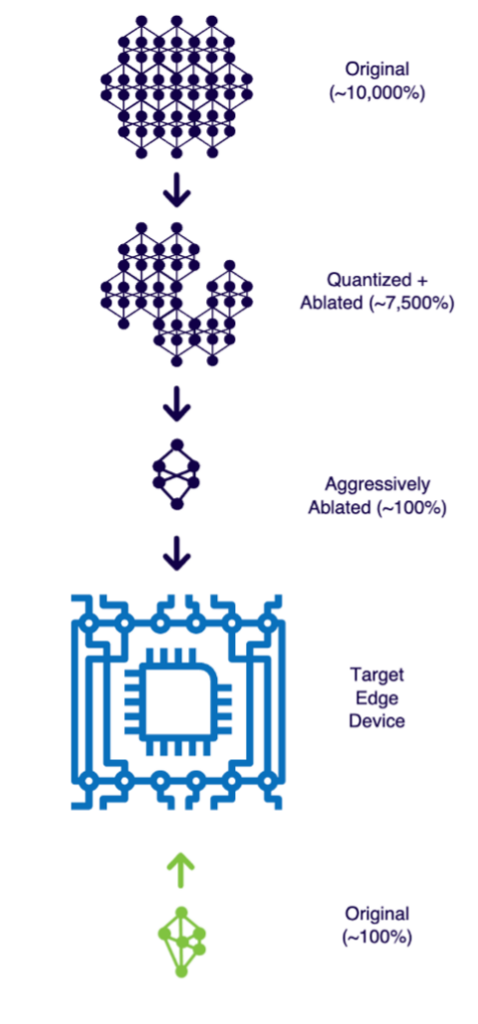

Top-down vs. Bottom-up Modeling

Unlike with typical software, decreasing model memory size (the main limiting factor when it comes to Edge deployment of AI models) typically results in significant decreases in model performance because it requires ablating perceptrons (the discrete components of neural networks) or networks of perceptrons. This makes the typical top-down approach to AI model development, where the most performant model is created first and then placed on the device, impractical unless the learned information can be preserved.

Instead, the bottom-up approach, where the model architecture is dictated by the memory constraints of the target device, provides the most promising avenue for extracting the maximum performance out of Edge-deployed models. The crux of the bottom-up approach is that no ablation needs to occur, so all learned information is preserved. It’s worth noting that a memory-constrained network will likely learn less, depending on its depth and the amount of high-fidelity data available. Nevertheless, this approach still likely represents the optimal tradeoff for Edge modeling.
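
A rough sketch of what "architecture dictated by memory" can look like in practice: start from a byte budget and solve for the largest network that fits, before any training happens. The example below does this for a plain fully connected network. The function names, the 4-bytes-per-parameter default (32-bit floats), and the choice to count only weights and biases are all assumptions for illustration; a real device also needs memory for activations and the runtime itself.

```python
def mlp_param_count(n_inputs, hidden_width, n_hidden_layers, n_classes):
    """Number of weights plus biases in a fully connected network."""
    sizes = [n_inputs] + [hidden_width] * n_hidden_layers + [n_classes]
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))


def widest_mlp_that_fits(budget_bytes, n_inputs, n_hidden_layers, n_classes,
                         bytes_per_param=4):
    """Largest hidden width whose parameters fit in budget_bytes (0 if none)."""
    width = 0
    while (mlp_param_count(n_inputs, width + 1, n_hidden_layers, n_classes)
           * bytes_per_param) <= budget_bytes:
        width += 1
    return width


if __name__ == "__main__":
    # Hypothetical device: 64 KiB reserved for model parameters.
    width = widest_mlp_that_fits(64 * 1024, n_inputs=64,
                                 n_hidden_layers=2, n_classes=4)
    print(width, mlp_param_count(64, width, 2, 4) * 4, "bytes")
```

Because the network is sized to the budget up front, nothing has to be removed after training, which is the property the bottom-up approach relies on.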

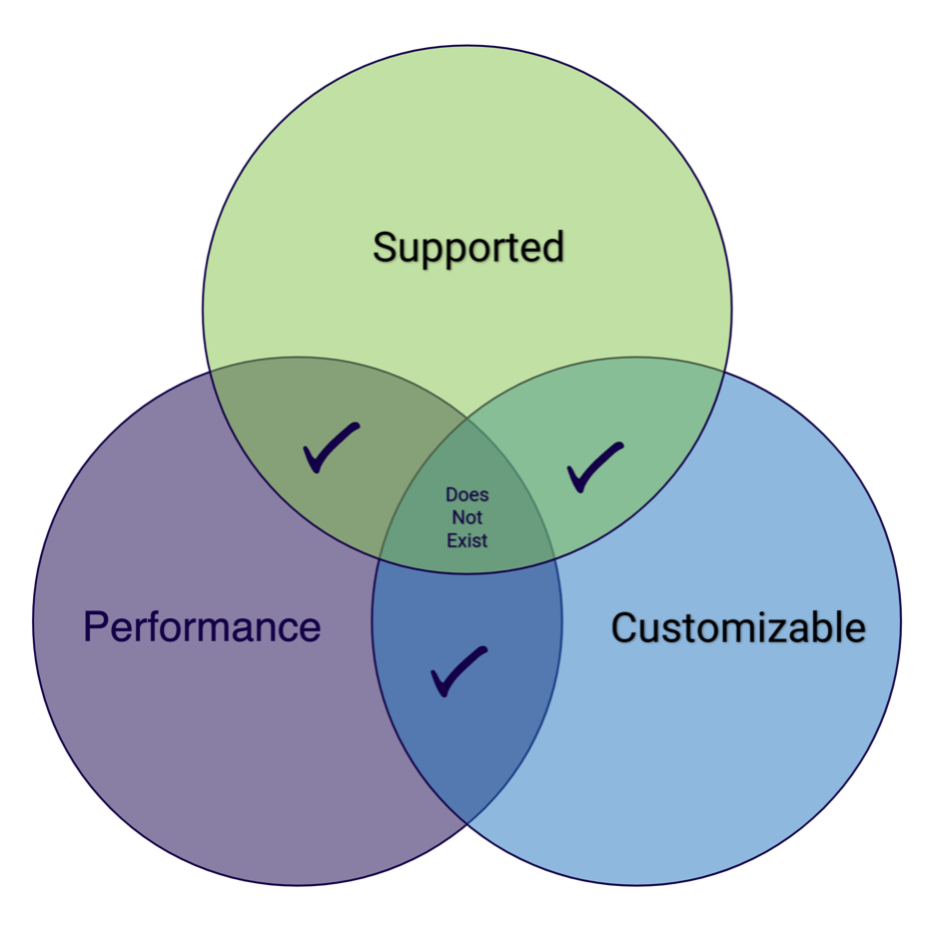

Performance vs. Integration Tradeoff

Some low-level bugs should be expected when using “maker grade” hardware. These platforms are intentionally open and often flexible for users to experiment with, but such advantages often come at the cost of performance and stability. Commercial software, on the other hand, benefits from the thousands of hours that have been spent improving stability and performance for selected features. This, though, inherently narrows the capability and supported functions to ensure such stability. Balancing supported functions (ease and stability of implementing new features), performance (the ability to perform specialized tasks efficiently), and customization (the ability to perform many different functions) becomes part of the decision calculus for hardware and software.

These tradeoffs in design decisions have coupled hardware-software effects. Implementing new software features on specialized hardware would require significant developer effort, as would requiring strict performance on less constrained hardware. Alternatively, choosing specialized software libraries can constrain supported hardware and/or significantly narrow customization. These tradeoffs are summarized in the accompanying graphic.

Currently we see maker hardware focusing on support and customization, whereas commercial hardware excels at support and performance. Total cost and development time can drive both categories; however, there is typically a non-linear effect on each tradeoff category.

Making such a design decision early can speed up initial development, but it comes at the risk of incurring unexpected technical debt as projects mature. Throughout our AI Sonobuoy project, we have found that experimentation and rapid design cycles have allowed us to quickly identify device features and capabilities that contribute most to overall project value. The associated hardware and software are then more extensively optimized to leverage these features and enhance project impact.

IQT Labs Perspective

Deploying AI models at the Edge, especially performant neural networks, is a tall order. The intricate, and often black-box, nature of working in Edge AI leads to significant technical risks that are baked in through the use of various hardware and software abstractions required to move quickly.

Still, there is tremendous potential in this domain that remains largely untapped. We hope that the mental models we’ve shared here, including our concept of an emerging Hierarchy of AI Modeling, will help direct efforts in the space intelligently and effectively, so you don’t need to struggle where we did.

As with all mental models, though, these were derived through experimentation and—also in true IQT Labs fashion—we are already looking to break them! If you have contrary perspectives, we’d love to have you come join the conversation.