Hello! This blog is part of a series about the AI Sonobuoy project by IQT Labs—a learn-by-doing exploration in maritime situational awareness. Please visit the first, second, and third blogs here:

- IQT Labs AI Sonobuoy – A Deeper Look at AI at the Edge

- IQT Labs Identifies AI Hierarchy Of Modeling That Accelerates Edge Intelligence

- The Hard Stuff: Designing a Cheap-Yet-Powerful Maritime AI Dataset Collector

When using Automatic Identification System (AIS) messages to auto-label data, we encountered several issues. This blog gives a primer on AIS and then discusses the challenges that must be addressed to make AIS messages viable auto-labels.

If you’d like to learn more about current or future Labs projects, or are looking for ways to collaborate, please contact us at labsinfo@iqt.org.

High-Level Overview

Meet Bob and Doug. While these vessels look identical, their AIS messages recorded by MarineTraffic report two different vessel-types: “Tug” and “Cargo.”

The AI Sonobuoy Collector was created to auto-label underwater audio recordings of ships by time, correlating hydrophone audio with AIS data for use in machine learning. Identical ships should have nearly identical acoustic signatures, so when they report two distinct ship types, the dataset ends up with conflicting labels for essentially the same sound, which lowers its quality. As we noted in a previous blog in this series, The Hierarchy of AI Modeling, label quality has significant downstream effects.

In this blog, we give a primer on AIS and then break down the labeling issues that arise from AIS into three categories. These categories guided how we corrected the auto-labeled dataset so that AIS could serve as a viable auto-label source.

How Ships’ Automatic Identification System (AIS) Works

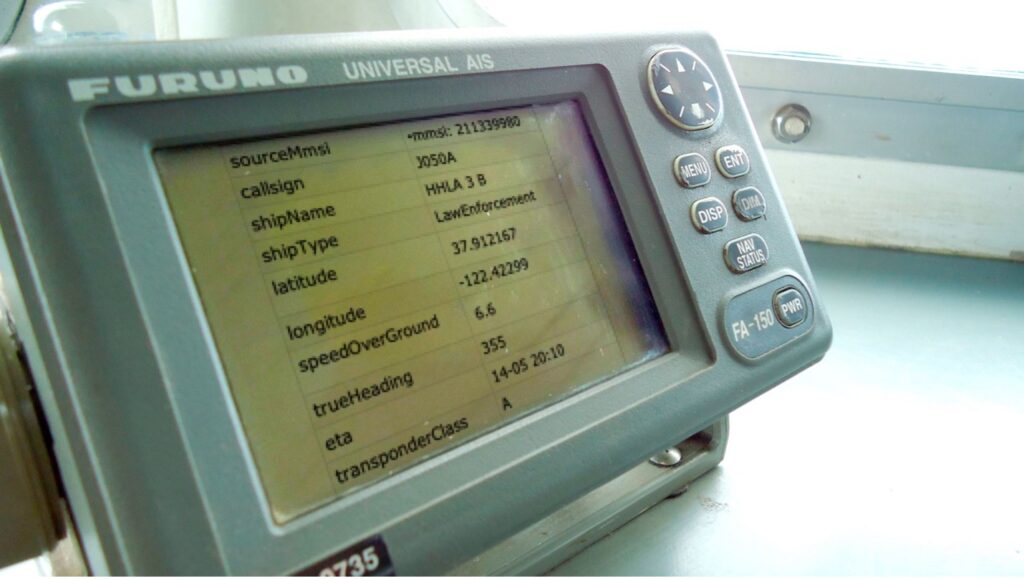

AIS is a collision-avoidance system for ships. Each AIS message contains a Maritime Mobile Service Identity (MMSI), a nine-digit number that uniquely identifies the broadcasting vessel.

There are two classes of AIS: Class A and Class B. Passenger ships and large cargo ships, for example, carry Class A transponders, while small vessels and pleasure craft typically carry Class B. The class determines which messages a vessel must broadcast and how often it must broadcast them.
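For auto-labeling, it helps to know which message types come from which class and which ones carry a ship-type field. The mapping below is a partial sketch based on the ITU-R M.1371 message catalog, not code from the AI Sonobuoy Collector; the dictionary and helper names are illustrative.

```python
from typing import Optional

# Partial mapping of AIS message types (ITU-R M.1371) to the transponder class
# that sends them. Illustrative subset only; the standard defines 27 types.
AIS_MESSAGE_TYPES = {
    1: ("A", "Position report, scheduled"),
    2: ("A", "Position report, assigned schedule"),
    3: ("A", "Position report, response to interrogation"),
    5: ("A", "Static and voyage-related data"),
    18: ("B", "Standard position report"),
    19: ("B", "Extended position report"),
    24: ("B", "Static data report"),
}

# Message types in this subset that include a ship-type field
# (for type 24, only part B carries it).
SHIP_TYPE_MESSAGES = {5, 19, 24}


def transponder_class(msg_type: int) -> Optional[str]:
    """Return 'A' or 'B' for the class-specific types above, else None."""
    entry = AIS_MESSAGE_TYPES.get(msg_type)
    return entry[0] if entry else None


def carries_ship_type(msg_type: int) -> bool:
    """True if messages of this type can serve as a ship-type label source."""
    return msg_type in SHIP_TYPE_MESSAGES
```

Position reports (types 1 to 3 and 18) arrive frequently but say nothing about ship type, so a labeler has to join them by MMSI to the less frequent static-data messages.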

For labeling purposes, both classes of vessels are required to broadcast their ship type. AIS messages also contain positional information in terms of the latitude and longitude of the current location of the broadcasting vessel. This makes it possible to partition data by distance from the buoy.
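Partitioning by distance requires the great-circle distance between the buoy and each reported position. Here is a minimal sketch using the haversine formula, assuming a spherical Earth with a mean radius of 6,371 km; the band limits in `distance_band` are hypothetical values, not the thresholds used in the project.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth assumption


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def distance_band(buoy, position, bands_km=(1.0, 5.0, 10.0)):
    """Index of the first (hypothetical) band containing the vessel, or None."""
    d = haversine_km(buoy[0], buoy[1], position[0], position[1])
    for i, limit in enumerate(bands_km):
        if d <= limit:
            return i
    return None
```

A vessel about 2.2 km north of a buoy at (0, 0), reporting (0.02, 0.0), lands in band 1; anything beyond the last band returns `None` and could be excluded from labeling.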

More information about AIS can be found in the “Additional Resources” section of this blog.

Three Challenges that Arise from Using AIS…

To highlight vessel-type discrepancies, we used an external data bank of AIS data provided through AccessAIS, a collaboration between the Bureau of Ocean Energy Management, the National Oceanic and Atmospheric Administration, and the US Coast Guard. We then compared the AccessAIS data in aggregate against our AIS collect.
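Because the two collections did not overlap in time or place, a per-message comparison is impractical, but a per-MMSI comparison still works. The sketch below assumes each dataset has already been reduced to `(mmsi, ship_type_code)` pairs and flags MMSIs whose reported codes disagree between the sources or vary within one. The function names and the MMSIs in the example are hypothetical.

```python
from collections import defaultdict


def types_by_mmsi(records):
    """Collect the set of ship-type codes reported for each MMSI."""
    out = defaultdict(set)
    for mmsi, ship_type in records:
        out[mmsi].add(ship_type)
    return out


def type_disagreements(ours, reference):
    """Return {mmsi: (our_codes, reference_codes)} for MMSIs present in both
    datasets whose code sets differ, or that report several codes in either."""
    a, b = types_by_mmsi(ours), types_by_mmsi(reference)
    flagged = {}
    for mmsi in a.keys() & b.keys():
        if a[mmsi] != b[mmsi] or len(a[mmsi]) > 1 or len(b[mmsi]) > 1:
            flagged[mmsi] = (sorted(a[mmsi]), sorted(b[mmsi]))
    return flagged


# Hypothetical records: (MMSI, AIS ship-type code).
ours = [(366000001, 52), (366000001, 52), (366000002, 70)]
reference = [(366000001, 70), (366000002, 70), (366000003, 60)]
print(type_disagreements(ours, reference))  # {366000001: ([52], [70])}
```

Comparing sets of codes rather than individual messages sidesteps the timing mismatch between the two collections.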

The AccessAIS collection station was not co-located with the AI Sonobuoy, and the two recorded over different time intervals, making it difficult to compare individual messages. Still, comparing the two datasets in aggregate helped us identify several categories of AIS discrepancies:

- Broadcast-Side Transmitter Misconfiguration

If a vessel's AIS transmitter is misconfigured during setup, for example with the wrong ship type entered, the collected data will reference the incorrect vessel-type.

This means that even if the AI Sonobuoy Collector recorded AIS messages from two identical ships and decoded them correctly, the underlying dataset would still be muddled. The two identical ships would have very similar acoustic signatures but would be auto-labeled as two different classes. In turn, this would have harmful downstream effects on the machine-learning and data-visualization pipelines if vessel-type is used as the label.
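AIS encodes ship type as a numeric code (for example, 52 for tugs and 70 through 79 for cargo ships). The sketch below collapses those codes into coarse training labels. The groupings are chosen for illustration only, but they show how a single wrong code entered at setup puts an otherwise identical hull into a different class.

```python
def coarse_vessel_label(code: int) -> str:
    """Map an AIS ship-type code (0-99) to a coarse, illustrative training label."""
    if code == 30:
        return "Fishing"
    if code in (31, 32):
        return "Towing"
    if code == 37:
        return "Pleasure craft"
    if code == 52:
        return "Tug"
    if 60 <= code <= 69:
        return "Passenger"
    if 70 <= code <= 79:
        return "Cargo"
    if 80 <= code <= 89:
        return "Tanker"
    return "Other/unknown"


# Two identical hulls, one configured with code 52 and one with code 70,
# end up in different classes.
print(coarse_vessel_label(52), coarse_vessel_label(70))  # Tug Cargo
```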

- Collection-Side Missing Messages

Missing messages on the collection side can result in two different issues: mislabeling a recording as ambient noise rather than as a vessel or mislabeling a recording as only containing a single ship when there’s actually more than one.

When there were no ships near the buoy, we used a heuristic that labeled those segments as the ambient noise of the environment. If the AI Sonobuoy Collector missed an AIS message from a ship near the buoy, that segment would be incorrectly labeled as ambient noise.

Similarly, when AIS data records one ship but messages from other nearby ships are missed, audio segments that contain two or more vessel signatures are labeled as containing only one, muddling the dataset.
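The ambient-noise heuristic and both failure modes can be sketched as follows. The sketch assumes each AIS fix has already been reduced to an MMSI, a timestamp, and a precomputed distance from the buoy. The `Fix` class, the toy MMSIs, and the 2 km radius are hypothetical. A fix the collector never recorded is simply absent from `fixes`, so the label silently degrades to fewer vessels or to `"ambient"`.

```python
from dataclasses import dataclass


@dataclass
class Fix:
    """One decoded AIS position report, reduced to what labeling needs."""
    mmsi: int
    t: float        # timestamp in seconds
    dist_km: float  # precomputed distance from the buoy


def label_segment(fixes, start, end, radius_km=2.0):
    """Label the audio segment [start, end) by the vessels heard via AIS.

    Returns "ambient" if no fix falls inside the radius during the window,
    otherwise a sorted tuple of the MMSIs present.
    """
    present = {
        f.mmsi for f in fixes
        if start <= f.t < end and f.dist_km <= radius_km
    }
    return "ambient" if not present else tuple(sorted(present))


fixes = [Fix(1, 5.0, 0.5), Fix(2, 6.0, 1.0), Fix(3, 7.0, 5.0)]
print(label_segment(fixes, 0.0, 10.0))      # (1, 2)
print(label_segment(fixes[1:], 0.0, 10.0))  # (2,): vessel 1's message was missed
print(label_segment([], 0.0, 10.0))         # ambient: every message was missed
```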

- Decoding-Side Deciphering Errors

AIS messages are broadcast as byte strings and decoded on our device in real time before being uploaded to the cloud. Incomplete or improperly decoded messages are discarded. Because the AI Sonobuoy Collector V2 design does not retain the discarded input, these decoding errors are difficult to diagnose.
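The decoding step itself is compact, which is part of why its failures are easy to miss. Below is a minimal sketch, not the Collector's decoder, that verifies the NMEA checksum, undoes AIS's 6-bit ASCII armoring, and extracts the message type and MMSI from a single-fragment `!AIVDM` sentence. It returns `None` rather than raising, mirroring a pipeline that silently discards bad input. A production system would more likely rely on an established AIS decoding library.

```python
from functools import reduce
from typing import Optional, Tuple


def nmea_checksum_ok(sentence: str) -> bool:
    """Verify the XOR checksum of the characters between '!' and '*'."""
    if not sentence.startswith("!") or "*" not in sentence:
        return False
    body, _, given = sentence[1:].partition("*")
    calc = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    try:
        return calc == int(given[:2], 16)
    except ValueError:
        return False


def payload_bits(payload: str) -> str:
    """Undo AIS 6-bit ASCII armoring and return the payload as a bit string."""
    bits = []
    for ch in payload:
        o = ord(ch)
        if 48 <= o <= 87:        # '0'..'W' -> 0..39
            v = o - 48
        elif 96 <= o <= 119:     # '`'..'w' -> 40..63
            v = o - 56
        else:
            raise ValueError(f"invalid armoring character {ch!r}")
        bits.append(format(v, "06b"))
    return "".join(bits)


def decode_header(sentence: str) -> Optional[Tuple[int, int]]:
    """Return (message_type, mmsi) for a single-fragment !AIVDM sentence, or None."""
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence.split("*")[0].split(",")
    if len(fields) != 7 or fields[1] != "1":  # multi-fragment input not handled here
        return None
    try:
        bits = payload_bits(fields[5])
    except ValueError:
        return None
    if len(bits) < 38:
        return None
    # Bits 0-5: message type; bits 6-7: repeat indicator; bits 8-37: MMSI.
    return int(bits[0:6], 2), int(bits[8:38], 2)


SAMPLE = "!AIVDM,1,1,,B,177KQJ5000G?tO`K>RA1wUbN0TKH,0*5C"
print(decode_header(SAMPLE))  # (1, 477553000)
```

Changing a single character of the sample (or its checksum) makes `decode_header` return `None`, and nothing downstream records why.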

…And How We Resolved Them

In our data-first AI Sonobuoy Collector software revision, which we’ll be discussing in detail in a blog post next week, the originally captured AIS message byte strings are pushed up to the cloud immediately, where they can be post-processed. This allows us to identify decoding errors by comparing the raw input with the decoded output.
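Keeping the raw sentences also makes one common source of incomplete messages visible: AIS payloads too long for one sentence are split into fragments that must be reassembled before decoding. The sketch below assumes checksums were verified earlier, groups fragments by sequential message ID and channel, and reports any group still missing parts. The function name and the synthetic sentences (with placeholder checksums) are hypothetical, and because sequential IDs are reused, a real implementation would also expire stale groups after a timeout.

```python
from collections import defaultdict


def reassemble(sentences):
    """Join multi-fragment !AIVDM payloads.

    Returns (payloads, incomplete), where incomplete lists the
    (sequential_id, channel) groups still missing fragments at the end.
    """
    pending = defaultdict(dict)  # (seq_id, channel) -> {fragment_number: payload}
    payloads = []
    for sentence in sentences:
        fields = sentence.split("*")[0].split(",")
        total, number = int(fields[1]), int(fields[2])
        seq_id, channel, payload = fields[3], fields[4], fields[5]
        if total == 1:
            payloads.append(payload)
            continue
        key = (seq_id, channel)
        frags = pending[key]
        frags[number] = payload
        if sorted(frags) == list(range(1, total + 1)):
            del pending[key]
            payloads.append("".join(frags[i] for i in range(1, total + 1)))
    return payloads, sorted(pending)


raw = [
    "!AIVDM,2,1,3,A,AAAA,0*00",
    "!AIVDM,2,2,3,A,BBBB,2*00",
    "!AIVDM,2,1,4,B,CCCC,0*00",  # second fragment never arrived
    "!AIVDM,1,1,,A,DDDD,0*00",
]
print(reassemble(raw))  # (['AAAABBBB', 'DDDD'], [('4', 'B')])
```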

To rectify the other AIS issues, we used an external ledger that included MMSIs, the AIS vessel-type, and images of the craft. A combination of the three data sources allowed us to identify discrepancies in our dataset and correct them, making the training of a useful machine-learning model possible.
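One way to express the correction step is to let a verified ledger entry override the AIS-reported vessel-type for that MMSI. Here is a minimal sketch, assuming segments are dictionaries with `mmsi` and `ais_type` keys and the ledger maps an MMSI to a type a human reviewer confirmed (for example, from the vessel images). All names and the toy data are hypothetical.

```python
def correct_labels(segments, ledger):
    """Apply ledger-verified vessel types on top of AIS-reported ones.

    segments: list of dicts with "mmsi" and "ais_type" keys.
    ledger:   dict mapping MMSI -> human-verified vessel type.
    Returns (corrected_segments, number_of_labels_changed).
    """
    corrected, changed = [], 0
    for seg in segments:
        verified = ledger.get(seg["mmsi"])
        label = seg["ais_type"] if verified is None else verified
        if label != seg["ais_type"]:
            changed += 1
        corrected.append({**seg, "label": label})
    return corrected, changed


# Toy example: the ledger says MMSI 2 is really a tug, despite reporting "Cargo".
segments = [
    {"mmsi": 1, "ais_type": "Tug"},
    {"mmsi": 2, "ais_type": "Cargo"},
    {"mmsi": 3, "ais_type": "Tanker"},
]
ledger = {1: "Tug", 2: "Tug"}
fixed, n = correct_labels(segments, ledger)
print([s["label"] for s in fixed], n)  # ['Tug', 'Tug', 'Tanker'] 1
```

Counting the changes gives reviewers a quick measure of how much the ledger altered the auto-labels.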

Stay tuned for a future blog where we discuss the machine-learning pipeline.

IQT Labs Perspective

Inconsistent vessel-type labels in AIS messages mask the ground truth, inherently undermining efforts to distinguish between classes of vessels.

We found a way to mitigate this problem by using an external data source with the unique identifier in AIS messages and images of vessels to rectify the ship-type labels. Although this places a significant burden on the humans in the loop, it produces higher-quality labels. Higher-quality data and more accurate labels have a cascading effect on the rest of the ML pipeline and allow for an end-to-end, closed-loop collection-and-detection pipeline with minimal human involvement.

In future work, we will be adding a camera to our system. Images from it will be correlated with AIS data and hydrophone audio. This should further improve label quality, allowing us to build more sophisticated machine-learning classifiers. If you’ve done something similar or are interested in working with us, please do reach out at info@iqtlabs.org.

Additional Resources …

- Navigation Center U.S. Coast Guard AIS Requirements

- Navigation Center U.S. Coast Guard Types of Automatic Identification Systems

- U.S. Coast Guard Automatic Identification System: USCG AIS Encoding Guide

- Navigation Center U.S. Coast Guard Class A AIS Position Report (Messages 1, 2, and 3)

- Marine Cadastre AccessAIS: A BOEM, NOAA & USCG Partnership